Since the launch of our expanded research data management (RDM) program in January, the Research Data Management Team in DVS has been busy defining and implementing our suite of services. Our “Lifecycle Services” are designed to assist scholars at all stages of their research project from the planning phase to the final curation and disposition of their data in an archive or repository. Our service model centers on four key areas: data management planning, data workflow design, data and documentation review, and data repository support. Over the past nine months, we have worked with Duke researchers across disciplines to provide these services, allowing us to see their value in action. Below we present some examples of how we have supported researchers within our four support areas.

Data Management Planning

With increasing data management plan requirements  as well as growing expectations that funding agencies will more strictly enforce and evaluate these plans, researchers are seeking assistance ensuring their plans comply with funder requirements. Through in-person consultations and online review through the DMPTool, we have helped researchers enhance their DMPs for a variety of funding agencies including the NSF Sociology Directorate, the Department of Energy, and the NSF Computer & Information Science & Engineering (CISE) Program.

as well as growing expectations that funding agencies will more strictly enforce and evaluate these plans, researchers are seeking assistance ensuring their plans comply with funder requirements. Through in-person consultations and online review through the DMPTool, we have helped researchers enhance their DMPs for a variety of funding agencies including the NSF Sociology Directorate, the Department of Energy, and the NSF Computer & Information Science & Engineering (CISE) Program.

Data Workflow Design

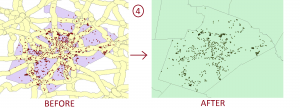

As research teams begin a project there are a variety  of organizational and workflow decisions that need to be made from selecting appropriate tools to implementing storage and backup strategies (to name a few). Over the past 6 months, we have had the opportunity to help a multi-institutional Duke Marine Lab Behavioral Response Study (BRS) implement their project workflow using the Open Science Framework (OSF). We have worked with project staff to think through the organization of materials, provided training on the use of the tool, and strategized on storage and backup options.

of organizational and workflow decisions that need to be made from selecting appropriate tools to implementing storage and backup strategies (to name a few). Over the past 6 months, we have had the opportunity to help a multi-institutional Duke Marine Lab Behavioral Response Study (BRS) implement their project workflow using the Open Science Framework (OSF). We have worked with project staff to think through the organization of materials, provided training on the use of the tool, and strategized on storage and backup options.

Data and Documentation Review

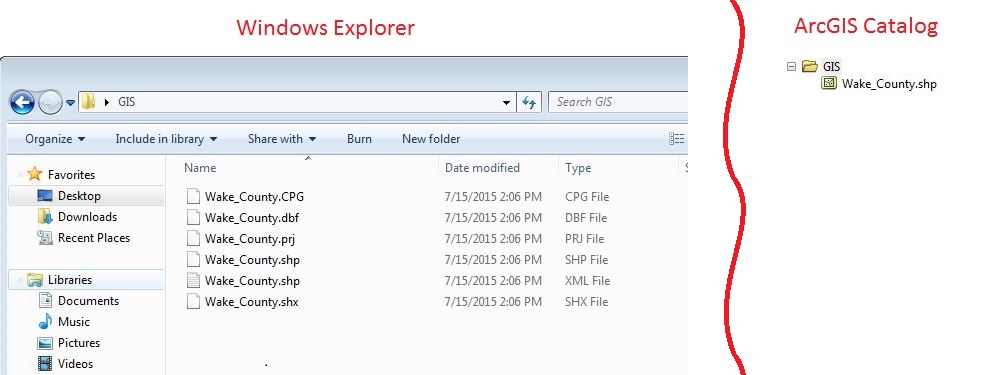

During a project, researchers make decisions about how to format,  describe, and structure their data for sharing and preservation. Questions may also arise surrounding how to ethically share human subjects data and navigate intellectual property or copyright issues. In conversations with researchers, we have provided suggestions for what formats are best for portability and preservation, discussed their documentation and metadata plans, and helped resolve intellectual property questions for secondary data.

describe, and structure their data for sharing and preservation. Questions may also arise surrounding how to ethically share human subjects data and navigate intellectual property or copyright issues. In conversations with researchers, we have provided suggestions for what formats are best for portability and preservation, discussed their documentation and metadata plans, and helped resolve intellectual property questions for secondary data.

Data Repository Support

At the end of a project, researchers may be required  or choose to deposit their data in an archive or repository. We have advised faculty and students on repository options based on their discipline, data type, and repository features. One option available to the Duke community is the Duke Digital Repository. Over the past nine months, we have assisted with the curation of a variety of datasets deposited within the DDR, many of which underlie journal publications.

or choose to deposit their data in an archive or repository. We have advised faculty and students on repository options based on their discipline, data type, and repository features. One option available to the Duke community is the Duke Digital Repository. Over the past nine months, we have assisted with the curation of a variety of datasets deposited within the DDR, many of which underlie journal publications.

This year Duke news articles have featured two research studies with datasets archived within the DDR, one describing a new cervical cancer screening device and another presenting cutting-edge research on a potential new state of matter. The accessibility of both Asiedu et al.’s screening device data and Charbonneau and Yaida’s glass study data enhances the overall transparency and reproducibility of these studies.

Our experiences thus far have enabled us to better understand the diversity of researchers’ needs and allowed us to continue to hone and expand our knowledge base of data management best practices, tools, and resources. We are excited to continue to work with and learn from researchers here at Duke!