During the last 8 months that I’ve worked at Duke, I’ve noticed a lot of squirrels. They seem to be everywhere on this campus, and, not only that, they come closer than any squirrels that I’ve ever seen. In fact, while we were working outside yesterday, a squirrel hopped onto our table and tried to take an apple from us. It’s become a bit of a joke in my department, actually. We take every opportunity we can to make a squirrel reference.

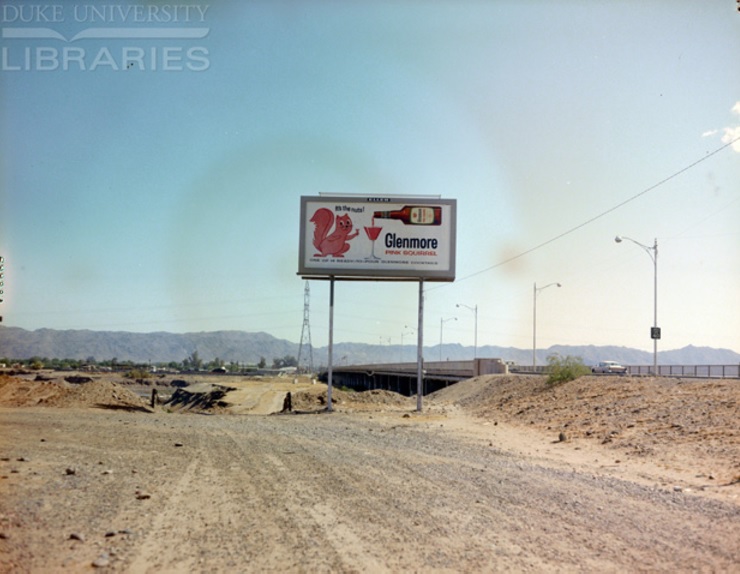

Anyhow, since we talk about squirrels so often, I decided I’d run a search in our digital collections to see what I’d get. The only image returned was the billboard above, but I was pretty happy with it. In fact, I was so happy with it that I used this very image in my last blog post. At the time, though, I was writing about what my colleagues and I had been doing with regard to the new research data initiative since the beginning of 2017, so I simply used it as a visual to make my coworkers laugh. Still, I made a note to revisit the image and investigate it further. Plus, although I bartended for many years during grad school, I’d never made (much less heard of) a Pink Squirrel cocktail. Drawing inspiration from our friends in Rubenstein Library who write for “The Devil’s Tales” in the “Rubenstein Library Test Kitchen” category, I thought I’d not only write about what I learned, but also try to recreate the drink.

This item comes from the “Outdoor Advertising Association of America (OAAA) Archives, 1885-1990s” digital collection, which includes over 16,000 images of outdoor advertisements and other scenes. It is one of a few digital outdoor advertising collections that we have, as previously written about here.

This digital collection houses six Glenmore Distilleries Company billboard images in total. Two are for liquors (a bourbon and a gin), and four are for “ready-to-pour” Glenmore cocktails.

These signs indicate that Glenmore Distilleries Company created a total of 14 ready-to-pour cocktails. I found a New York Times article from August 19, 1965 in our catalog stating that Glenmore Distilleries Co. had expanded its line to 18 drinks, which means that the billboards in our collection have to pre-date 1965. The company’s president, Frank Thompson Jr., was quoted as saying that he expected “exotic drinks” to account for any future surge in sales of bottled cocktails.

OK, so I learned that Glenmore Distilleries had bottled a drink called a Pink Squirrel sometime before 1965. Next, I needed to learn more about the Pink Squirrel itself. Had Glenmore created it? What was in it? Why was it PINK?

It appears the Pink Squirrel was quite popular in its day and has risen and fallen in popularity in the decades since. I couldn’t find a definitive academic source, but if one trusts Wikipedia, the Pink Squirrel was first created at Bryant’s Cocktail Lounge in Milwaukee, Wisconsin. The establishment still exists, and its website states that the original bartender, Bryant Sharp, is credited with inventing the Pink Squirrel (as well as the Blue Tail Fly and the Banshee, if you’re interested in cocktails). Wikipedia lists 15 popular culture references for the drink, many from 90s sitcoms (I’m a child of the 80s but don’t remember this) and other more current references. I also found an online source saying it was popular on the New York cocktail scene in the late 70s and early 80s (maybe?). Our Duke catalog returns some results, as well, including articles from Saveur (2014), New York Times Magazine (2006), Restaurant Hospitality (1990), and Cosmopolitan (1981). These are mostly variations on the recipe, including cocktails made with cream, a cocktail made with ice cream (Saveur says “blender drinks” are a cherished tradition in Wisconsin), a pie(!), and a cheesecake(!!).

Armed with recipes for the cream-based and the ice cream-based cocktails, I figured I was all set to shop for ingredients and make the drinks. I quickly discovered, though, that one of the three ingredients, crème de noyaux, is a liqueur that few companies still produce in large quantities, and it proved impossible to find around the Triangle. It’s an important ingredient in this drink, not only for its nutty flavor, but also because it’s what gives the cocktail its pink hue (and obviously its name!). Determined to make this work, I decided to search for a good enough alternative. I started with the Duke catalog, as all good library folk do, but after very little luck there, I turned back to Google. This led me to the Wikipedia article for crème de noyaux, which suggested substituting Amaretto and some red food coloring. It also directed me to an interesting blog post about none other than crème de noyaux, the Pink Squirrel, Bryant’s Cocktail Lounge, and a recipe from 1910 on how to make crème de noyaux. With time against me, I chose to sub Amaretto and red food coloring instead of making the 1910 homemade version.

First up was the cream-based cocktail. The drink contains 1.5 ounces of heavy cream, 0.75 ounces of white crème de cacao, and 0.75 ounces of crème de noyaux (or Amaretto with a drop of red food coloring), and is served up in a martini glass.

The result was a creamy, chocolatey flavor with a slight nuttiness, and just enough sweetness without being overbearing. The ice cream version substitutes half a cup of vanilla ice cream for the heavy cream and is blended rather than shaken. It had a thicker consistency and was much sweeter. My fellow taster and I definitely preferred the cream version. In fact, don’t be surprised if you see me around with a pink martini in hand sometime in the near future.