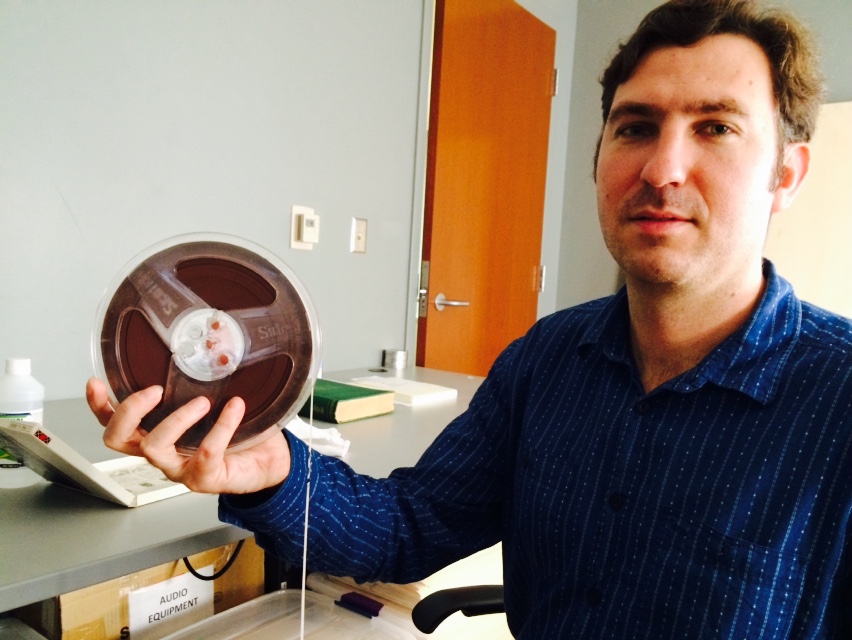

The Digital Production Center engages with various departments within the Libraries and across campus to preserve endangered media and create unique digital collections. We work especially closely with The Rubenstein Rare Book, Manuscript, & Special Collections Library, as they hold many of the materials that we digitize and archive on a daily basis. This collaboration requires a shared understanding of numerous media types and their special characteristics; awareness of potential conservation and preservation issues; and a working knowledge of digitization processes, logistics, and limitations.

To support this ongoing collaboration, we recently completed a semester-long cross-training course with the Rubenstein’s Reproductions Manager, Megan O’Connell. Megan is one of our main points of contact for weekly patron requests, and we felt that this training would strengthen our ability to handle tricky and time-sensitive digitization jobs going forward. The plan was for Megan to work with all three of our digitization specialists (audio, video, & still image) and get a combination of hands-on and observational learning opportunities.

Still image makes up the bulk of our workload, so we decided to spend most of the training on these materials. “Still image” includes anything that we digitize via photographic or scanning technology, e.g. manuscripts, maps, bound periodicals, posters, photographs, and slides. We identified a group of uniquely challenging materials of this type and digitized one of each for hands-on training, including:

- Bound manuscript – Most of these items cannot be opened more than 90 degrees. We stabilize them in a custom-built book cradle, capture the recto sides of the pages, then flip the book and capture the verso sides. The resulting files then have to be interleaved into the correct sequence.

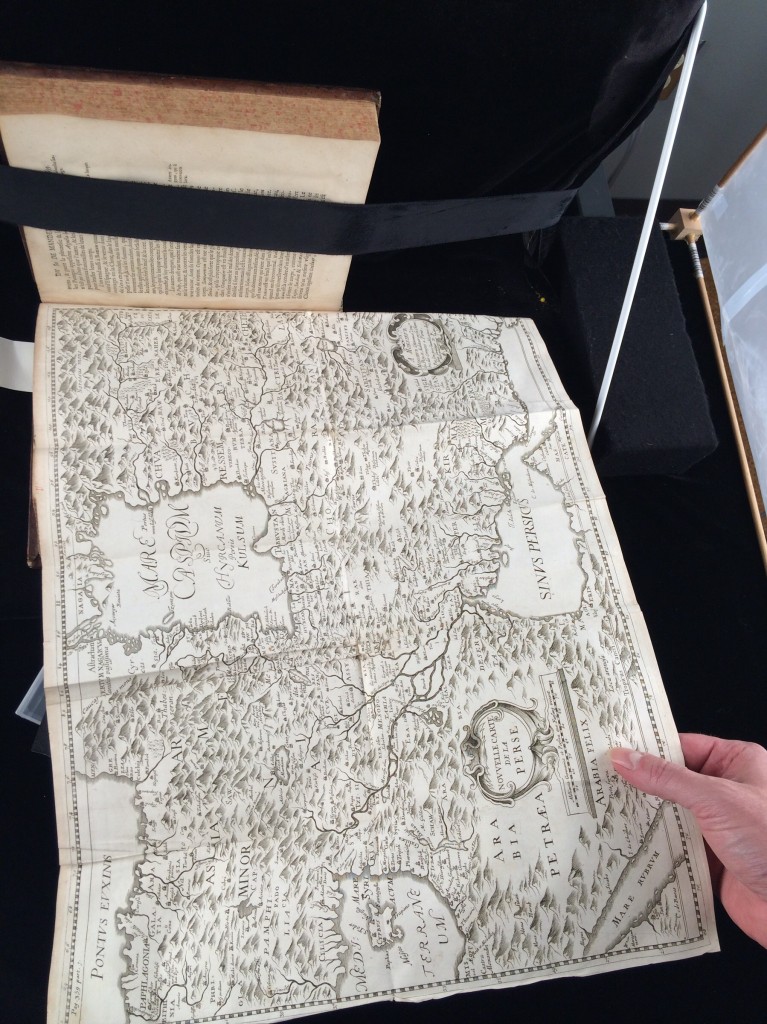

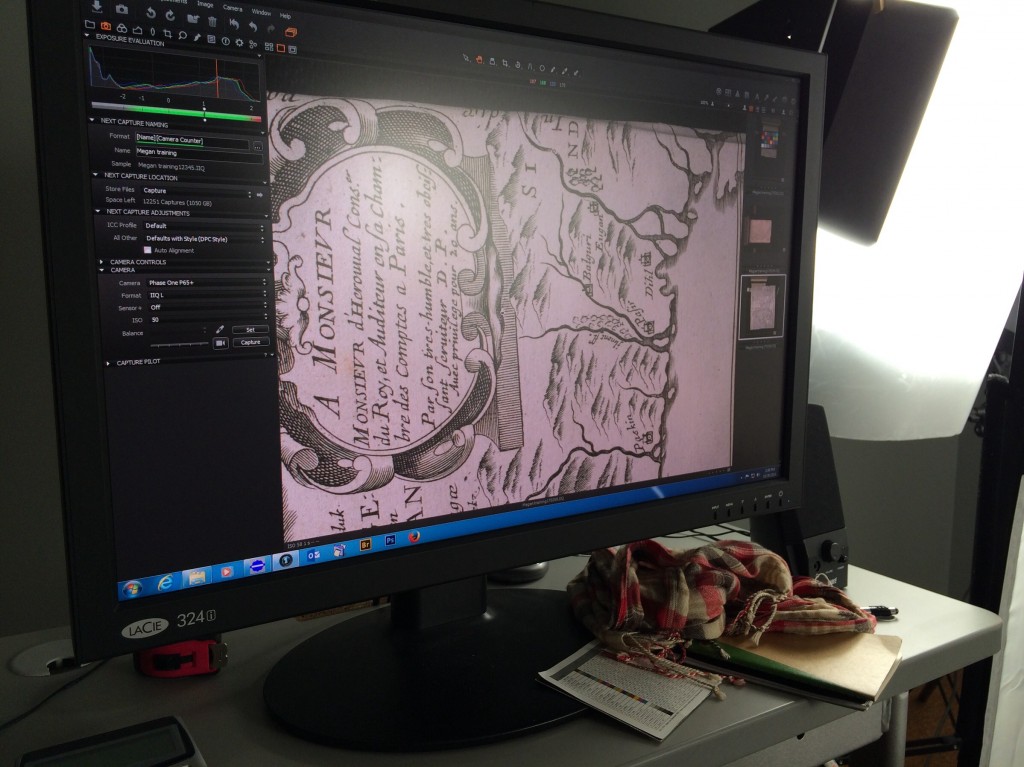

- Map, or other oversize item – These materials are often too large to capture in a single camera shot. Because our camera is mounted on a sliding track, we can take multiple shots, which we then stitch together into a seamless whole.

- Item with texture or varying depths, e.g. a folded map tipped into a book – It is often challenging to support these items properly and level them so that the whole surface falls within the camera’s depth of field and stays in focus.

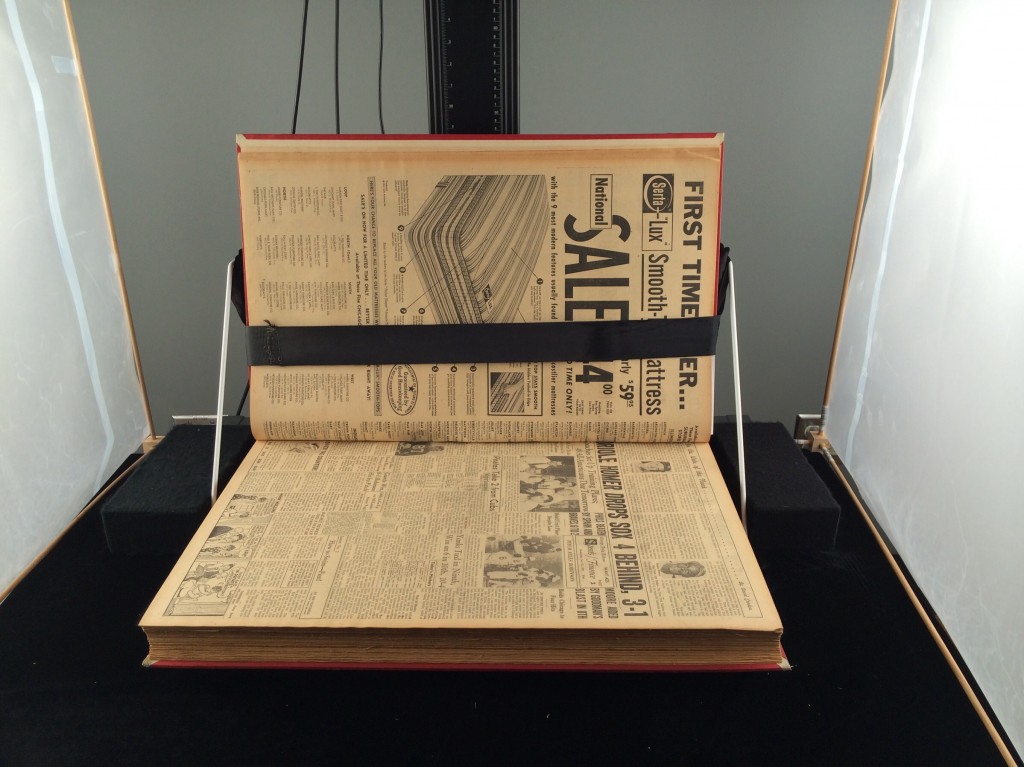

- ANR volume – These are large, heavy volumes that typically contain older newspapers and periodicals. The paper can be very fragile, so the volumes have to be handled and supported carefully to avoid damaging or tearing the pages.

- Item with a tight binding and text that runs into the gutter – We do our best to capture all of the text, but it will sometimes appear to curve or disappear into the gutter in the resulting digital image.
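The interleaving step for bound manuscripts is essentially a merge of two ordered lists. Below is a minimal Python sketch, assuming each capture pass is saved as an ordered list of filenames, that each leaf is read recto first and then verso, and that (depending on how the book is turned) the verso pass may have been shot back to front. The function name, the `versos_reversed` flag, and the filenames are hypothetical illustrations, not the DPC’s actual workflow or tooling.

```python
from typing import List


def interleave_pages(rectos: List[str], versos: List[str],
                     versos_reversed: bool = False) -> List[str]:
    """Merge separately captured recto and verso files into reading order.

    Hypothetical helper. Assumes each leaf is read recto first, then verso,
    so the result is r1, v1, r2, v2, ...
    """
    # Assumption: if the verso pass ran from the back of the book to the
    # front, its files arrive in reverse order and must be flipped first.
    if versos_reversed:
        versos = list(reversed(versos))

    # A final leaf may have a blank, uncaptured verso, so allow one fewer.
    if len(versos) not in (len(rectos), len(rectos) - 1):
        raise ValueError("verso count must equal recto count or be one fewer")

    sequence: List[str] = []
    for i, recto in enumerate(rectos):
        sequence.append(recto)
        if i < len(versos):
            sequence.append(versos[i])
    return sequence


if __name__ == "__main__":
    rectos = ["r001.tif", "r002.tif", "r003.tif"]
    versos = ["v003.tif", "v002.tif", "v001.tif"]  # shot back to front
    print(interleave_pages(rectos, versos, versos_reversed=True))
    # → ['r001.tif', 'v001.tif', 'r002.tif', 'v002.tif', 'r003.tif', 'v003.tif']
```

The count check is there because a mismatch between the two passes usually signals a skipped or doubled shot, which is better caught before the files are renamed into final sequence.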

Working through this list with Megan, I was struck by the diversity of materials that we collect and digitize. The training process also highlighted the variety of tricks, techniques, and hacks that we employ to get the best possible digital transfers, given the limitations of the available technology and the materials’ condition. I came out of the experience with a renewed appreciation of the complexity of the digitization work we do in the DPC, the significance of the rare materials in the collection, and the excellent service that we are able to provide to researchers through the Rubenstein Library.

Check out Megan’s blog post on the Devil’s Tale for more on the other media formats I wasn’t able to cover in the scope of this post.

Here’s to more collaboration across boundaries in the New Year!