The questions asked in the U.S. Census have changed over time to reflect both the data collection needs of federal agencies and evolving societal norms. Census geographies have evolved over the same period to reflect population change and shifting administrative boundaries in the United States.

Attempts to Provide Standardized Data

For the researcher who needs to compare demographic and socioeconomic data over time, this variability in data and geography can be problematic. Various data providers have attempted to harmonize questions and to generate standard geographies using algorithms that allow for comparisons over time. Some of the projects mentioned in this post have used sophisticated weighting techniques to make more accurate estimates. See, for instance, some of the NHGIS documentation on standardizing data from 1990 and from 2000 to 2010 geography.

NHGIS

The NHGIS Time Series Tables link census summary statistics across time and may require two types of integration: attribute integration, ensuring that the measured characteristics in a time series are comparable across time, and geographic integration, ensuring that the areas summarized by time series are comparable across time.

NHGIS often uses “nominally integrated tables,” where the aggregated data is presented as it was compiled and areas are matched across years by name, regardless of any boundary changes. For instance, “Durham County” data from 1960 and 2000 are compared based on the common name of the county.

For geographically standardized tables, where data from one year is aggregated to geographic areas from another year, NHGIS provides documentation with details on the weighting algorithms it uses.

NHGIS has resolved discrepancies in the electronic boundary files, as they illustrate here (an area of Cincinnati).

Social Explorer

The Social Explorer Comparability Data is similar to the NHGIS Time Series Tables, but with more of a drill-down consumer interface. (Go to Tables and scroll down to the Comparability Data.) Only 2000 to 2010 data are available at the state, county, and census tract level. It provides data reallocated from the 2000 U.S. decennial census to the 2010 geographies, so you can get the earlier data in 2010 geographies for better comparison with 2010 data.

LTDB

The Longitudinal Tract Database (LTDB), developed at Brown University, provides normalized boundaries at the census tract level for 1970-2010. Question coverage varies over time. Documentation for the project is available online:

- Project overview

- Web interface

- Article on methodology (mentioned further below)

- LTDB tools (input your own data; use 2010 data)

NC State has translated this data into ArcGIS geodatabase format. A README file, a codebook, and the geodatabase itself are available for download.

Do-It-Yourself

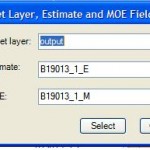

If you need to normalize data that isn’t yet available this way, GIS software may be able to help. Using intersection and re-combining techniques, it can generate estimates of older data in more recent geographies. In ArcGIS, this involves setting the ratio policy when creating a feature layer, so that numeric attribute values are apportioned among the overlapping geographies. The method assumes that the variable is evenly distributed across the entire source area, which is not as sophisticated as some of the algorithms used by groups such as NHGIS.
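To make the even-distribution assumption concrete, here is a minimal Python sketch of area-proportional apportionment. It is not the ArcGIS workflow itself: the tract IDs, populations, and rectangular shapes are invented for illustration, and real tract boundaries would need a GIS library to compute the overlaps.

```python
from typing import Dict, Tuple

# Hypothetical stand-in for real polygons: (xmin, ymin, xmax, ymax).
Rect = Tuple[float, float, float, float]


def rect_area(r: Rect) -> float:
    """Area of a rectangle; zero if the rectangle is empty or inverted."""
    return max(0.0, r[2] - r[0]) * max(0.0, r[3] - r[1])


def overlap_area(a: Rect, b: Rect) -> float:
    """Area of the intersection of two rectangles (zero if they do not overlap)."""
    return rect_area((max(a[0], b[0]), max(a[1], b[1]),
                      min(a[2], b[2]), min(a[3], b[3])))


def areal_weighting(source_shapes: Dict[str, Rect],
                    source_counts: Dict[str, float],
                    target_shapes: Dict[str, Rect]) -> Dict[str, float]:
    """Apportion each source count to targets by share of source area overlapped.

    Assumes the counted population is spread evenly across each source area.
    """
    estimates = {tid: 0.0 for tid in target_shapes}
    for sid, sshape in source_shapes.items():
        total = rect_area(sshape)
        for tid, tshape in target_shapes.items():
            share = overlap_area(sshape, tshape) / total
            estimates[tid] += source_counts[sid] * share
    return estimates


# Invented example: two older tracts redrawn into two newer tracts.
old_tracts = {"A": (0, 0, 2, 1), "B": (2, 0, 4, 1)}
old_population = {"A": 1000, "B": 600}
new_tracts = {"X": (0, 0, 3, 1), "Y": (3, 0, 4, 1)}

estimates = areal_weighting(old_tracts, old_population, new_tracts)
print(estimates)
```

In this toy layout, old tract A falls entirely inside new tract X, while old tract B is split evenly between X and Y, so half of B's population is assigned to each. If B's residents actually live mostly on one side, the estimate will be off, which is why more sophisticated weighting can be worth the effort.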

Another research strategy employs crosswalks to harmonize census data over time. Crosswalks are tables that let you proportionally assign data from one year's geography to another's, or re-aggregate data from one type of geography to another. Sources include the NHGIS geographic crosswalk files, the Census Bureau’s geographic relationship files, and the Geocorr utility from the Missouri Census Data Center.
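As a rough illustration of how a crosswalk is applied, the Python sketch below multiplies each source count by a weight and sums the results by target area. The IDs, weights, and row layout are hypothetical; actual crosswalk files from NHGIS, the Census Bureau, or Geocorr have their own column names and weight definitions, so check their documentation before adapting this.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical crosswalk rows: (source_id, target_id, weight).
# Each weight is the share of the source area's count assigned to the target.
CrosswalkRow = Tuple[str, str, float]


def weights_sum_to_one(crosswalk: List[CrosswalkRow], tol: float = 1e-9) -> bool:
    """Check that the weights for every source area sum to 1 (nothing lost or doubled)."""
    totals: Dict[str, float] = defaultdict(float)
    for source_id, _target_id, weight in crosswalk:
        totals[source_id] += weight
    return all(abs(total - 1.0) <= tol for total in totals.values())


def apply_crosswalk(counts: Dict[str, float],
                    crosswalk: List[CrosswalkRow]) -> Dict[str, float]:
    """Reallocate counts from source areas to target areas using crosswalk weights."""
    out: Dict[str, float] = defaultdict(float)
    for source_id, target_id, weight in crosswalk:
        out[target_id] += counts.get(source_id, 0) * weight
    return dict(out)


# Invented example: two 2000 tracts mapped onto two 2010 tracts.
crosswalk = [
    ("2000_T1", "2010_A", 1.0),
    ("2000_T2", "2010_A", 0.25),
    ("2000_T2", "2010_B", 0.75),
]
counts_2000 = {"2000_T1": 400, "2000_T2": 800}

counts_in_2010_tracts = apply_crosswalk(counts_2000, crosswalk)
print(counts_in_2010_tracts)
```

The weight check is a cheap safeguard: if the weights for a source area do not sum to one, the reallocated totals will not match the original totals.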

You can contact CDVS at askdata@duke.edu to inquire about the options for your project.