Post by Hannah Rozear, Librarian for Biological Sciences and Global Health, and Sarah Park, Librarian for Engineering and Computer Science

Unless you’ve been living under a rock, you’ve heard the buzz about ChatGPT. It can write papers! Debug code! Do your laundry! Create websites from thin air! While it is an exciting tech development with enormous possibilities for applications, understanding what’s under the hood and what it does well/not-so-well is critically important.

ChatGPT is an artificial intelligence chatbot developed by OpenAI and launched for public use in November 2022. While other AI chatbots are also in development by tech giants such as Google, Apple, and Microsoft, OpenAI’s early rollout has eclipsed the others for now, with the site reaching more than 100 million users in two months. For some perspective, this is faster widespread adoption than TikTok, Instagram, and many other popular apps.

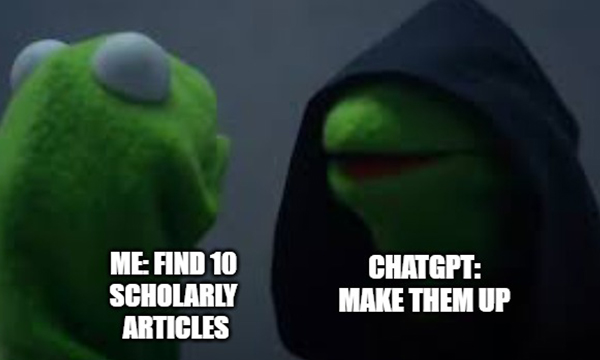

What you may not know about ChatGPT is that it has significant limitations as a reliable research assistant. One such limitation is that it has been known to fabricate or “hallucinate” (in machine learning terms) citations. These citations may sound legitimate and scholarly, but they are not real. Much like a person experiencing hallucinations can speak with conviction about things that are not there, AI can confidently generate responses with no data behind them. If you try to find these sources through Google or the library, you will turn up NOTHING.

Why does it do this? ChatGPT is built on a Large Language Model and has been trained on a huge dataset of internet sources. It generates text by predicting which words are likely to come next, so when asked for a citation it can assemble something that looks like one (plausible authors, title, and journal) without checking that the work exists. It can quickly and simply generate easy-to-understand responses to any question you throw at it. But the responses are only as good as the quality of the data it has been trained on. Its core strength lies in recognizing language patterns, not in reading and analyzing lengthy scholarly texts. Given that, it may not be the most reliable source for in-depth research. The following is a shortlist of what we’ve observed ChatGPT is good for and not good for.

What It’s Good For

- Generating ideas for related concepts, terms, and words about a particular topic. I asked ChatGPT, “What are some keywords for the topic of AI literacy?” It replied with: Artificial Intelligence (AI), Machine Learning (ML), Deep Learning, Neural Networks, Natural Language Processing (NLP), Robotics, Data Science, Big Data, Predictive Analytics, Ethics of AI, Bias in AI, Explainable AI, Human-AI Interaction, Cognitive Computing… These are all great leads for terms I might use to look for articles and books on this topic.

- Suggestions for databases where I could find literature on the topic. I asked ChatGPT, “What are some good library databases I could search to find more information about the topic of AI literacy?” ChatGPT replied with: IEEE Xplore, ACM Digital Library, ScienceDirect, JSTOR, ProQuest, arXiv, and Web of Science. It also suggested checking with my library to see what’s available. A more direct route to answering this type of question would be consulting the Duke Libraries Research Guides and/or connecting with the Subject Specialist at Duke who is familiar with the resources we have available on any given topic.

- Suggestions for improving writing. Because ChatGPT has been trained on a large corpus of text, it has picked up a wide range of word choices and phrasings in context. I have found it particularly useful for checking grammar and sentence structure in American English, as well as for suggesting alternative phrasing, synonyms, or quick translations of my writing into another language. I have also experimented with asking ChatGPT to rewrite a paragraph; when it produces an unexpected response, that may indicate that parts of my writing do not make sense in that language. Either way, it is important to review the suggested text thoroughly and make sure it meets your criteria before using it.

What It’s NOT Good For

- DO NOT ask ChatGPT for a list of sources on a particular topic! ChatGPT is based on a Large Language Model and does not have the ability to match relevant sources to any given topic. It may do OK with some topics or sources, but it may also fabricate sources that don’t exist.

- Be wary of asking ChatGPT to summarize a particular source, or write your literature review. It may be tempting to ask ChatGPT to summarize the main points of the dense and technical 10-page article you have to read for class, or to write a literature review synthesizing a field of research. Depending on the topic and availability of data it has on that topic, it may summarize the wrong source or provide inaccurate summaries of specific articles—sometimes making up details and conclusions.

- Do not expect ChatGPT to know current events or predict the future. ChatGPT’s “knowledge” is based on a dataset that was available before September 2021, so it may not be able to provide up-to-date information. For instance, when I asked about the latest book published by Haruki Murakami in the US, ChatGPT responded with First Person Singular, which was published in April 2021. However, the correct answer is Novelist as a Vocation, which was released in November 2022. ChatGPT did not seem aware of any developments after September 2021. It’s worth noting that Murakami’s new novel is expected to be released in April 2023.

AI chat technology is rapidly evolving and it’s exciting to see where this will go. Much like Google and Wikipedia did when they first accelerated our access to information, these new AI-based tools require their users to think about how to incorporate them carefully and ethically into their own research and writing. If you have any doubts or questions, ask real human experts: use the library’s Ask a Librarian chat, or schedule a one-on-one consultation with a librarian for help.

Resources

- Alkaissi, H., & McFarlane, S. I. (2023). Artificial Hallucinations in ChatGPT: Implications in Scientific Writing. Cureus, 15(2), e35179. https://doi.org/10.7759/cureus.35179

- McMichael, J. (2023, January 20). Artificial Intelligence and the Research Paper: A Librarian’s Perspective. SMU Libraries.

- Learn more about AI Tech News on the Hard Fork podcast.

- Faculty and instructors: Consult with Duke Learning Innovation for ideas about incorporating AI literacy into your teaching.

Fake citations have a long, and somewhat useful, history in the info industry. Those of us old enough to remember when print was the only way to sell compilative forms of information, such as reference works, were told to include several bogus citations. Then, if someone stole your work and repackaged it, the phony citations would be irrefutable proof. Plus ça change.

Not only will ChatGPT invent realistic conclusions to theoretical questions, it uses the details of actual citations to document the incorrect information. Then, it lies about it. It even cites US patents using the patent numbers of completely irrelevant patents. I FOUND 20 OUT OF 20 CITED PATENTS OR ARTICLES WERE FICTITIOUS. Beware, this program is amazingly unreliable and deceptive.