Unless you’ve been actively ignoring what’s going on in the world of Technical Services, you’ve certainly heard about linked data at this point. You’ve heard of BIBFRAME, you’ve heard that it’s coming, you’ve heard that it will change the library landscape, and you may have even taken a workshop on writing some triples. But what actually is linked data?

First, some brief (I promise) historical context on linked data. In 1989, Tim Berners-Lee had the idea of combining hypertext with existing internet transmission technology, essentially inventing the World Wide Web. Once HTML was developed shortly after, websites were able to embed links to other sites inside of them, linking documents together. The technologies have gotten a bit more sophisticated since then, but essentially this is still how most people use the Internet: they put an address into their browser, and then click the links to move between pages.

In 2006, Tim Berners-Lee began to work on what it would look like if our data on the Internet were linked together like our documents were. If your data references information about the state of North Carolina, instead of storing it you could link to an authoritative source that contains data about North Carolina, most likely much more data than you would have stored. He laid out some principles for best practices, but conceptually, that’s it — instead of storing data about things, store links to authoritative sources of data about those things.

An Evolving Model

Understanding what linked data is not can be a good place to start when trying to understand what it is. To help with this, I want to look at the layers of standards we use to catalog materials. Some definitions:

Conceptual model: An abstract way of looking at the entities of the bibliographic universe. It has no direct effect on the records we create, but instead it informs the content standards we create.

Content Standard: A set of rules that dictates what data we record and the manner in which they are recorded. A content standard answers the questions of what the entities, attributes and relationships are; what is recorded and what is transcribed; and what is mandatory or optional.

Data structure: The digital format that serves as a carrier for data. You fill out the data structure by following the rules of the content standard.

The Old Model

This is the basis of our modern cataloging structure. For our data structure, we have good ol’ MARC. Created in the 1960s and adopted in the 1970s, its original purpose was to do two things: transmit catalog cards between libraries and facilitate the printing of those cards. MARC could be searched by ‘experts,’ but we’re talking about the 70s here. People who used computers to do this kind of work were, by definition, experts. We’ve repurposed MARC to do all sorts of things, from tracking our orders to creating exports for our discovery layer. Around the same time as MARC, we had AACR, the Anglo-American Cataloguing Rules, created to standardize how libraries created catalogs and catalog records. MARC was our data structure, and AACR was our content standard. MARC says we have a 245 field, with two single-digit indicators and different subfields. AACR says this is what a title proper is, this is where you find it on a book, and these are the subfields of the MARC 245 where that information goes.
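To make the split between data structure and content standard concrete, here is a minimal Python sketch of a 245 field. The class name `MarcField`, its `display` method, and the sample title are assumptions made up for illustration, not part of any MARC library. The data structure only dictates the shape (a tag, two indicators, coded subfields); the content standard decides what goes into each subfield.

```python
from dataclasses import dataclass, field


@dataclass
class MarcField:
    """Hypothetical stand-in for one MARC field (not a real library class)."""
    tag: str          # field tag, e.g. "245"
    indicator1: str   # first single-digit indicator
    indicator2: str   # second single-digit indicator
    subfields: list[tuple[str, str]] = field(default_factory=list)

    def display(self) -> str:
        """Render the field as 'tag indicators $code value ...'."""
        body = " ".join(f"${code} {value}" for code, value in self.subfields)
        return f"{self.tag} {self.indicator1}{self.indicator2} {body}"


# Sample data invented for illustration.
title_field = MarcField(
    tag="245",
    indicator1="1",
    indicator2="0",
    subfields=[("a", "Romeo and Juliet /"), ("c", "William Shakespeare.")],
)

print(title_field.display())
```

Nothing in `MarcField` knows what a title proper is. That knowledge lives in the content standard, which is why the two layers can change independently.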

MARC and AACR work well together because they were designed with a unifying product in mind: a printed catalog card. Saving space was paramount — how much can we fit onto a 3×5 card before it’s too busy and unusable? As we’ve moved away from the print card catalog, we’ve started to adapt our models as well.

The Current Model

We’re still on MARC, but the rest has changed. To begin with, we see the introduction of a conceptual model, Functional Requirements for Bibliographic Records (FRBR). We don’t use FRBR to catalog per se, but it informed the development and understanding of our new content standard, Resource Description and Access (RDA). RDA updated how the entities, attributes, and relationships were defined and identified, and provided mappings to MARC.

MARC and RDA don’t really work all that well together. MARC is a very flat record structure. It takes all of the data and shoves it into one record, while RDA is all about splitting description into different levels. Romeo and Juliet, the work, is written by William Shakespeare, regardless of the publication date of any particular manifestation.

The Proposed Model

And that brings us to finally mentioning linked data. The model that we expect to be coming will replace FRBR with the newly released Library Reference Model (LRM), which makes mostly minor changes, and replace MARC with BIBFRAME, a data structure built for bibliographic data and stored in a linked data format.

This has all been a very roundabout way of saying that moving to linked data doesn’t really change RDA at all. All of those cataloging skills of identifying different types of titles (RDA has around 20 types of title), assigning subject headings, and figuring out if the book you’re looking at is the same as the record you’re looking at don’t really change. Eventually the changes made from FRBR to the LRM will inform some updates to future versions of RDA, but that has nothing to do with BIBFRAME or linked data. The changes only flow down.

Semantic Technologies

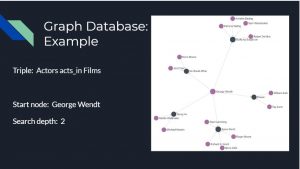

Linked data is a combination of several technologies that work together to help execute Tim Berners-Lee’s vision of a semantic web. Let’s start with graph databases. In a traditional relational database, like Microsoft Access, data are stored in tables and linked together by having the primary key of one record in Table A appear as a foreign key in a record in Table B. In a graph database, data are stored as nodes and edges, where every data point is a node and every connection between two nodes is an edge.
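Here is a rough Python sketch of the same fact stored both ways. The table layouts, key values, and the "written by" edge label are assumptions chosen for illustration; no real database is involved.

```python
# Relational style: two tables tied together by a key.
authors = {1: {"name": "William Shakespeare"}}                 # Table A, primary key 1
books = {10: {"title": "Romeo and Juliet", "author_id": 1}}    # Table B, foreign key author_id

# Graph style: every data point is a node, every connection is an edge.
nodes = {"Romeo and Juliet", "William Shakespeare"}
edges = [("Romeo and Juliet", "written by", "William Shakespeare")]


def author_via_join(book_id: int) -> str:
    """Follow the foreign key from the book row to the author row."""
    return authors[books[book_id]["author_id"]]["name"]


def author_via_edge(title: str) -> str:
    """Walk the 'written by' edge that starts at the given node."""
    for start, label, end in edges:
        if start == title and label == "written by":
            return end
    raise KeyError(title)


print(author_via_join(10))
print(author_via_edge("Romeo and Juliet"))
```

Both lookups return the same author. The difference is that adding a new kind of relationship to the graph is just one more edge, with no table to redesign.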

Graph databases are where we start to see the concept of a triple play out. They’re great for when relationships are complex and not uniform.

Next we have ontologies. An ontology is a naming and definition of the categories, properties, and relations between the entities, data, and concepts that make up one domain of knowledge (taken from Wikipedia). Let’s look at an example.

Say I wanted to make one ontology to describe a single volume monograph, or book, and one to describe a piece of two-dimensional visual art. Here are two small ontologies I might design:

Now, let’s say I want to create an ontology for comic books. I don’t need to start from scratch, I can use existing elements in my new ontology and only create new elements when they don’t already exist. I can include a prefix to show which ontology a certain element is defined in.
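As a sketch of that reuse, here is how the three ontologies might look as plain sets of prefixed element names in Python. Every element name and prefix below (`book:`, `art:`, `comic:`) is a hypothetical stand-in invented for this example.

```python
# Hypothetical element names, invented for illustration.
book_ontology = {"book:title", "book:author", "book:publisher"}
art_ontology = {"art:title", "art:artist", "art:medium"}

# The comic ontology borrows what already exists and mints only what is missing.
comic_ontology = {
    "book:title",
    "book:publisher",
    "art:artist",
    "comic:issueNumber",
    "comic:inker",
}


def prefix_of(element: str) -> str:
    """Return the part before the colon, which names the defining ontology."""
    return element.split(":", 1)[0]


reused = sorted(e for e in comic_ontology if prefix_of(e) != "comic")
minted = sorted(e for e in comic_ontology if prefix_of(e) == "comic")

print("reused:", reused)
print("new:", minted)
```

The prefix is what tells a reader, or a program, where to look up the definition of each element.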

BIBFRAME is an ontology. It lays out what the entities and attributes are and how they are all allowed to connect to each other. It’s replacing the MARC layer of our existing technology.

The next technology that goes into linked data is the triplestore. A triplestore is a type of graph database designed to store RDF triples. It is a piece of software that enables all of the normal database functionality you’d expect, like complex searching and fast retrieval. That’s all most of us need to know, which is very fortunate because understanding how they work on a deeper level is some pretty heavy duty PhD level computer science.
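To give a feel for what "storing triples" and "searching" mean, here is a toy in-memory sketch in Python. `TinyTripleStore` and its methods are invented for this example, and the sample statements are illustrative. A real triplestore adds indexing, persistence, and a query language on top of this basic idea.

```python
from typing import Optional

Triple = tuple[str, str, str]


class TinyTripleStore:
    """Toy store for three-part statements (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self._triples: set[Triple] = set()

    def add(self, s: str, p: str, o: str) -> None:
        """Store one statement."""
        self._triples.add((s, p, o))

    def match(
        self,
        s: Optional[str] = None,
        p: Optional[str] = None,
        o: Optional[str] = None,
    ) -> list[Triple]:
        """Return stored triples that fit the pattern; None acts as a wildcard."""
        return sorted(
            t for t in self._triples
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)
        )


store = TinyTripleStore()
store.add("Romeo and Juliet", "written by", "William Shakespeare")
store.add("Hamlet", "written by", "William Shakespeare")
store.add("Hamlet", "has genre", "Tragedy")

# "Which things were written by William Shakespeare?"
print(store.match(p="written by", o="William Shakespeare"))
```

Searching is pattern matching: fix the parts you know, leave the rest open, and the store returns every statement that fits.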

All of this brings us to our last piece, RDF, or Resource Description Framework. RDF is a metadata model that stores data in triples. As a model, it’s fairly bare bones. Every statement has three parts, a subject, a predicate (the property), and an object, so triples follow the pattern of ‘subject’ ‘predicate’ ‘object.’ Here’s a very basic example:

Our subject and object are the name of the hotel and the address, and the predicate is the property that describes the relationship between them. I made up an ‘omni’ ontology to help illustrate how you might model this.

In reality, things get a little more complex:

Here, I’ve split out the different elements of the address into its individual parts and grouped them all onto an empty node that represents the whole of the address. I’ve called it ‘OWPHAddress.’
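A minimal Python sketch of that grouping follows. The node name `OWPHAddress` comes from the text; the hotel name, the `omni:` property names, and the address values are placeholders, not the real data.

```python
# Each tuple is one three-part statement.
# Property names and address values are placeholders invented for illustration.
triples = [
    ("Example Hotel", "omni:hasAddress", "OWPHAddress"),
    ("OWPHAddress", "omni:street", "123 Example Street"),
    ("OWPHAddress", "omni:city", "Example City"),
    ("OWPHAddress", "omni:state", "Example State"),
    ("OWPHAddress", "omni:postalCode", "00000"),
]


def describe(node: str) -> dict[str, str]:
    """Collect every property that hangs off one node."""
    return {prop: obj for subj, prop, obj in triples if subj == node}


# Hop from the hotel to its address node, then read the parts off that node.
address_node = describe("Example Hotel")["omni:hasAddress"]
print(describe(address_node))
```

The address node carries no value of its own. Its only job is to hold the parts together so the hotel can point at the address as a whole.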

And then things get even more complex:

Here, I’ve broken it out a few different ways. First, I’ve replaced the hotel name and address nodes with URIs. In RDF, every node is either a URI or a literal, which is an actual string that represents the value. The URI is just a string that uniquely identifies the entity, just like the 001 of a bib record does. I’ve also replaced the literals for city and state with URIs that point out to GeoNames, a database on the Internet that publishes geographic data as linked data.
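The URI versus literal distinction can be sketched in Python like this. The `URI` and `Literal` classes are invented for the example, and every address under `example.org` is a placeholder, including the one standing in for a link out to an external geographic source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class URI:
    """A node or property identified by a URI; other statements can point at it."""
    value: str


@dataclass(frozen=True)
class Literal:
    """A plain string value; it is an end point, nothing hangs off it."""
    value: str


# All URIs below are invented placeholders, not real identifiers.
OMNI = "http://example.org/omni/"
HOTEL = URI("http://example.org/hotel/1")
ADDRESS = URI("http://example.org/address/1")
CITY = URI("http://example.org/places/example-city")  # stands in for an external link

triples = [
    (HOTEL, URI(OMNI + "name"), Literal("Example Hotel")),
    (HOTEL, URI(OMNI + "hasAddress"), ADDRESS),
    (ADDRESS, URI(OMNI + "street"), Literal("123 Example Street")),
    (ADDRESS, URI(OMNI + "city"), CITY),
]

linked = [obj for _, _, obj in triples if isinstance(obj, URI)]
literal_values = [obj.value for _, _, obj in triples if isinstance(obj, Literal)]

print(len(linked), "objects are links;", len(literal_values), "are literals")
```

Swapping a literal for a URI is what turns a stored string into a link that someone else maintains.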

What does this let us do? This is just how I modeled an address of a hotel. If there is a restaurant inside of that hotel, I could attach it to that same address node, meaning I don’t need to duplicate the data anywhere. If you translate this to describing bibliographic resources, then multiply by a few million to scale up to our collection, you have a dataset of hundreds of millions of triples that represent all of the items Duke owns.
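The restaurant case can be sketched in a few lines of Python. As before, the entity names, `omni:` properties, and address values are placeholders; only the node name `OWPHAddress` comes from the text.

```python
# Placeholder data: two entities point at one shared address node.
triples = [
    ("Example Hotel", "omni:hasAddress", "OWPHAddress"),
    ("Example Restaurant", "omni:hasAddress", "OWPHAddress"),
    ("OWPHAddress", "omni:street", "123 Example Street"),
    ("OWPHAddress", "omni:city", "Example City"),
]


def street_of(entity: str) -> str:
    """Hop from the entity to its address node, then to the street value."""
    address = next(o for s, p, o in triples if s == entity and p == "omni:hasAddress")
    return next(o for s, p, o in triples if s == address and p == "omni:street")


# The street is stored once, yet both entities resolve to it.
print(street_of("Example Hotel"))
print(street_of("Example Restaurant"))
```

If the street value ever needs correcting, there is exactly one statement to change, and both the hotel and the restaurant pick up the fix.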

Conclusion

Linked data is a very technical concept, which can make it difficult to accurately talk about, both within our community and with other communities. I didn’t really go into the question of why we want to move to linked data, because I think that discussion relies on understanding what it actually is. I hope this helps as a bit of a primer for what is going on under the hood for linked data, and please feel free to get in touch if you have any questions or thoughts you want to share.