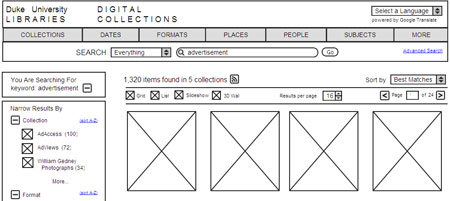

As we continue our redesign, we’re getting some really helpful feedback on our mockups for item pages. By all means, keep it coming! Here are some new prototypes for our search results screens, along with our analysis of our current search results and examples of other systems we like. What do you think?

Prototypes

There are five examples here: some search across all collections and others search within a single collection. Areas of particular interest to us: the location of the ‘Narrow by’ facets, the display of results for matching digital collections or digital exhibits, and collection branding and information.

Analysis


Here’s what we have learned about our search interface from our various evaluation methods:

Web Analytics

- About 75% of searches are within-collection searches; 25% are cross-collection.

- The majority of searches are for various topics, though many users search for items from a particular decade (“1920s”), format (“advertisements”), or collection (“Gamble”).

- Some users attempt to retrieve every item possible through search (“*”, “all”, “a”).

Usability Tests (Spring 2008)


Diamonstein-Spielvogel Video Archive Usage Stats
