Looking for something to keep you company on your summer vacation? Why not direct your devices to Duke Digital Collections? Seriously! Here are a few of the compelling collections we debuted earlier this spring, and we have more coming in late June.

Hayti-Elizabeth Street renewal area

These maps and two-volume report document the infrastructure of Durham’s Hayti-Elizabeth Street neighborhood before the construction of the Durham Freeway, as well as the justifications for redeveloping the area. This is an excellent resource for anyone studying Durham history or the urban renewal initiatives of the mid-20th century.

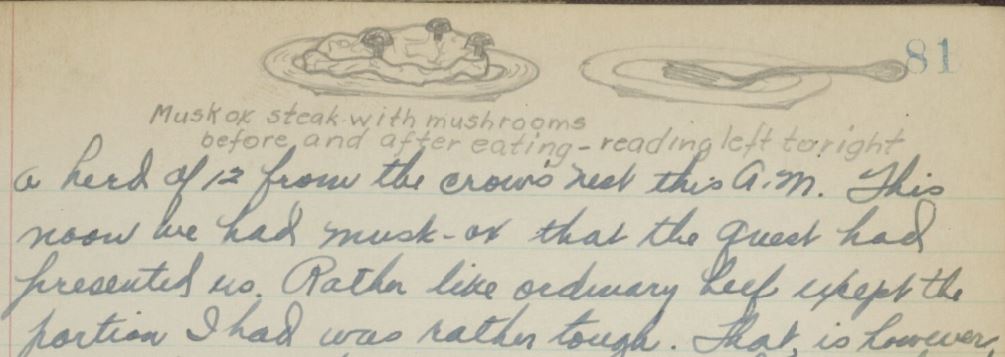

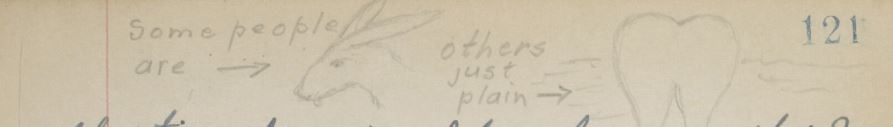

African American Soldiers’ Photograph Albums

We launched eight collections of photograph albums created by African American soldiers who served in the military at locations including Japan, Vietnam, and Iowa. Together these albums help “document the complexity of the African American military experience” (Bennett Carpenter, in his blog post “War in Black and White: African American Soldiers’ Photograph Albums”).

Sir Percy Molesworth Sykes Photograph Album

This photograph album contains pictures taken by Sir Percy Molesworth Sykes during his travels with his sister, Ella Sykes, in a mountainous region of Central Asia that is now the Xinjiang Uyghur Autonomous Region of China. According to the collection guide, the album’s “images are large, crisp, and rich with detail, offering views of a remote area and its culture during tensions in the decades following the Russo-Turkish War.”

A Sidenote

Both the Hayti-Elizabeth and soldiers’ album collections were proposed in response to our 2017 call for digitization proposals related to diversity and inclusion. Other collections from that call include the Emma Goldman papers, the Josephine Leary papers, and the ReImagining collection.

Coming soon

Our work never stops, and we have several large projects in the works that are scheduled to launch by the end of June. The first is a batch of video recordings from the Memory Project. We are also busy migrating the incredible photographs from the Sidney Gamble collection into the digital repository. Finally, one last batch of Radio Haiti recordings is on the way.

Keeping in touch

We launch new digital collections roughly every quarter, and as part of an assessment project we have been investigating new ways to promote them. We are thinking of starting a newsletter. Would you subscribe? What other ways would you like to keep in touch with Duke Digital Collections? Post a comment or contact me directly.