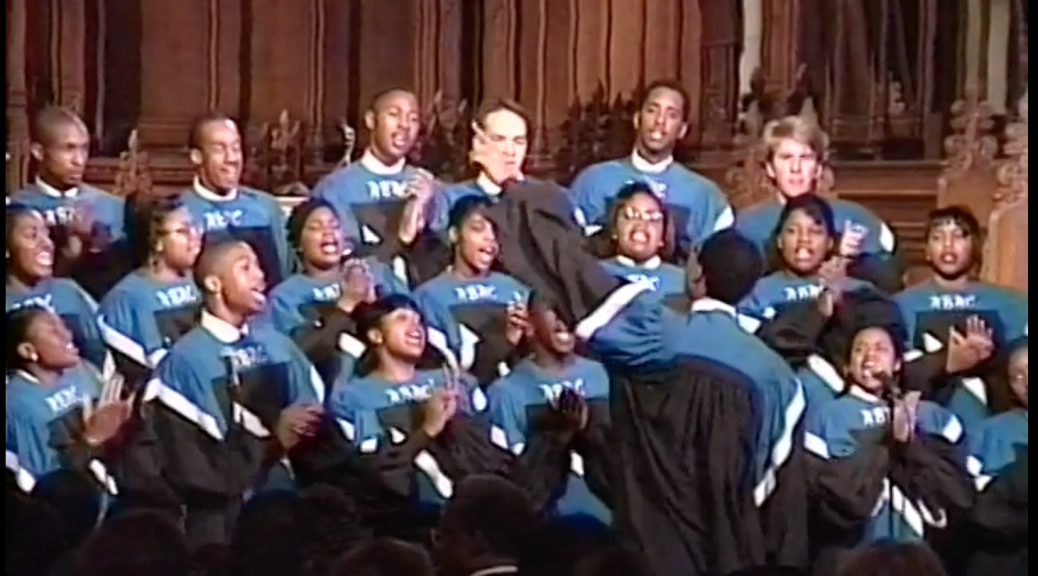

Over the course of 2017, we improved our capacity to support digital audiovisual materials in the Duke Digital Repository (DDR) by leaps and bounds. A little more than a year ago, I wrote a Bitstreams blog post highlighting the new features we had just developed in the DDR to provide basic functionality for AV, especially in support of the Duke Chapel Recordings collection. What a difference a year makes.

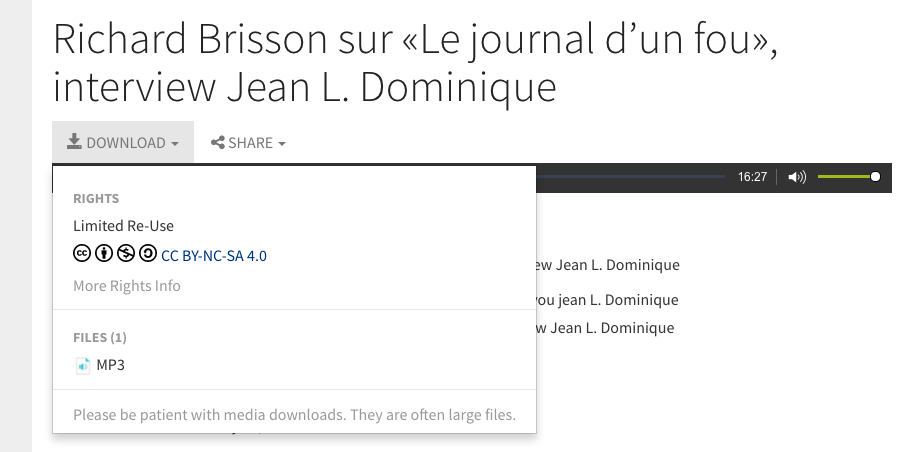

This past year brought renewed focus on AV development, as we worked to bring the NEH grant-funded Radio Haiti Archive online (launched in June). At the same time, our digital collections legacy platform migration efforts shifted toward moving our existing high-profile digital AV material into the repository.

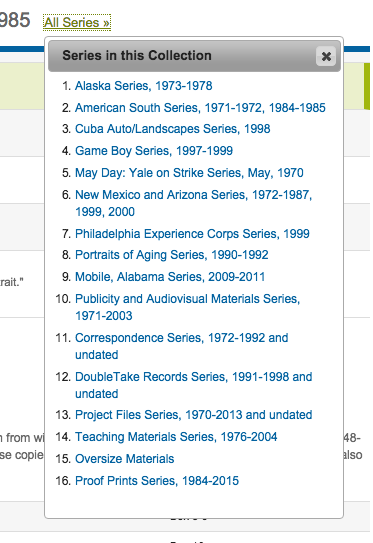

Closed Captions

At Duke University Libraries, we take accessibility seriously. We aim to include captions or transcripts for the audiovisual objects made available via the Duke Digital Repository, especially to ensure that the materials can be perceived and navigated by people with disabilities. For instance, work is well underway to create closed captions for all 1,400 items in the Duke Chapel Recordings project.

The DDR now accommodates modeling and ingest for caption files, and our AV player interface (powered by JW Player) presents a CC button whenever a caption file is available. Caption files are encoded using WebVTT, the modern W3C standard for associating timed text with HTML audio and video. WebVTT is structured so as to be machine-processable, while remaining lightweight enough to be reasonably read, created, or edited by a person. It’s a format that transcription vendors can provide. And given its endorsement by W3C, it should be a viable captioning format for a wide range of applications and devices for the foreseeable future.

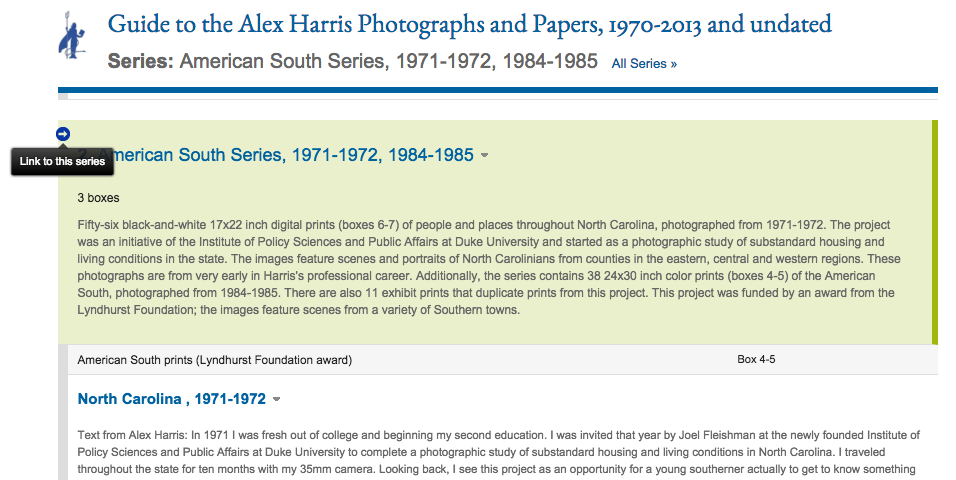

Interactive Transcripts

Displaying captions within the player UI is helpful, but it only gets us so far. For one, it doesn’t give a user a way to read the caption text without playing the media. We also need to support captions for audio files, but unlike the video player, the audio player has no screen area in which to render them.

So for both audio and video, our solution is to convert the WebVTT caption files on the fly into an interactive in-page transcript. Using the webvtt-ruby gem (developed by Coconut), we parse the WebVTT text cues into Ruby objects, then render them back on the page as HTML. We then use the JW Player JavaScript API to keep the media player and the HTML transcript in sync. Clicking on a transcript cue advances the player to the corresponding moment in the media, and the currently playing cue gets highlighted as the media plays.
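To make the cue-to-HTML step concrete, here is a minimal sketch in plain Ruby. It is not the DDR's code and it does not use webvtt-ruby: the simplified parser, the `Cue` struct, the `data-start` attribute, and the CSS class names are all assumptions made for illustration. The `active_cue_index` helper shows the lookup that decides which cue to highlight; in the real interface that step would run in the browser against the player's reported playback time.

```ruby
require "cgi"

# One caption cue: start and finish in seconds, plus its text.
Cue = Struct.new(:start, :finish, :text)

# Convert a WebVTT timestamp ("mm:ss.ttt" or "hh:mm:ss.ttt") to seconds.
def vtt_seconds(stamp)
  stamp.split(":").map(&:to_f).reduce(0.0) { |total, part| total * 60 + part }
end

# Simplified parser: any block containing a "-->" timing line is a cue.
# Blocks without one (the WEBVTT header, NOTE blocks) are skipped.
def parse_cues(vtt)
  vtt.split(/\r?\n\s*\r?\n/).filter_map do |block|
    lines = block.strip.split(/\r?\n/)
    timing = lines.index { |line| line.include?("-->") }
    next unless timing

    # Drop any cue settings that follow the end timestamp.
    start, finish = lines[timing].split("-->").map { |s| vtt_seconds(s.strip.split.first) }
    Cue.new(start, finish, lines[(timing + 1)..].join(" "))
  end
end

# Render cues as an ordered list; data-start (a hypothetical attribute)
# carries the seek target for click handling on the page.
def transcript_html(cues)
  items = cues.map do |cue|
    %(<li class="cue" data-start="#{cue.start}">#{CGI.escapeHTML(cue.text)}</li>)
  end
  "<ol class=\"transcript\">\n#{items.join("\n")}\n</ol>"
end

# Index of the cue playing at `time` seconds, or nil between cues.
def active_cue_index(cues, time)
  cues.index { |cue| time >= cue.start && time < cue.finish }
end

SAMPLE = <<~VTT
  WEBVTT

  00:00:01.000 --> 00:00:04.500
  Good morning, and welcome.

  00:01:05.250 --> 00:01:09.000
  Let us begin with a reading.
VTT

puts transcript_html(parse_cues(SAMPLE))
```

Storing the start time on each list item means one click handler can serve the whole transcript: it reads the attribute and asks the player to seek to that offset.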

We also do some extra formatting when the WebVTT cues include voice tags (<v> tags), which can optionally indicate the name of the speaker (e.g., <v Jane Smith>). The in-page transcript is indexed by Google for search retrieval.
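As a rough illustration of the voice-tag handling, the sketch below pulls the speaker name out of a cue and renders it as a label. The regular expression, the method names, and the `speaker` class are assumptions for this example, and it only handles a single voice tag at the start of a cue.

```ruby
require "cgi"

# Split cue text into [speaker, speech]. Speaker is nil when the cue
# does not begin with a WebVTT voice tag such as <v Jane Smith>.
def split_voice(text)
  match = text.match(/\A<v(?:\.[^\s>]+)?\s+([^>]+)>(.*?)(?:<\/v>)?\z/m)
  return [nil, text] unless match

  [match[1].strip, match[2].strip]
end

# Render one cue, prefixing the speaker name when one is present.
def cue_html(text)
  speaker, speech = split_voice(text)
  body = CGI.escapeHTML(speech)
  speaker ? %(<span class="speaker">#{CGI.escapeHTML(speaker)}:</span> #{body}) : body
end

puts cue_html("<v Jane Smith>Thank you all for coming.</v>")
```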

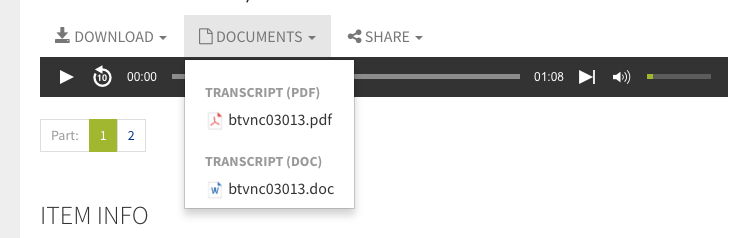

Transcript Documents

In many cases, especially for audio items, we may have only a PDF or other type of document with a transcript of a recording that isn’t structured or time-coded. Like captions, these documents are important for accessibility. We have developed support for displaying links to these documents near the media player. Look for some new collections using this feature to become available in early 2018.

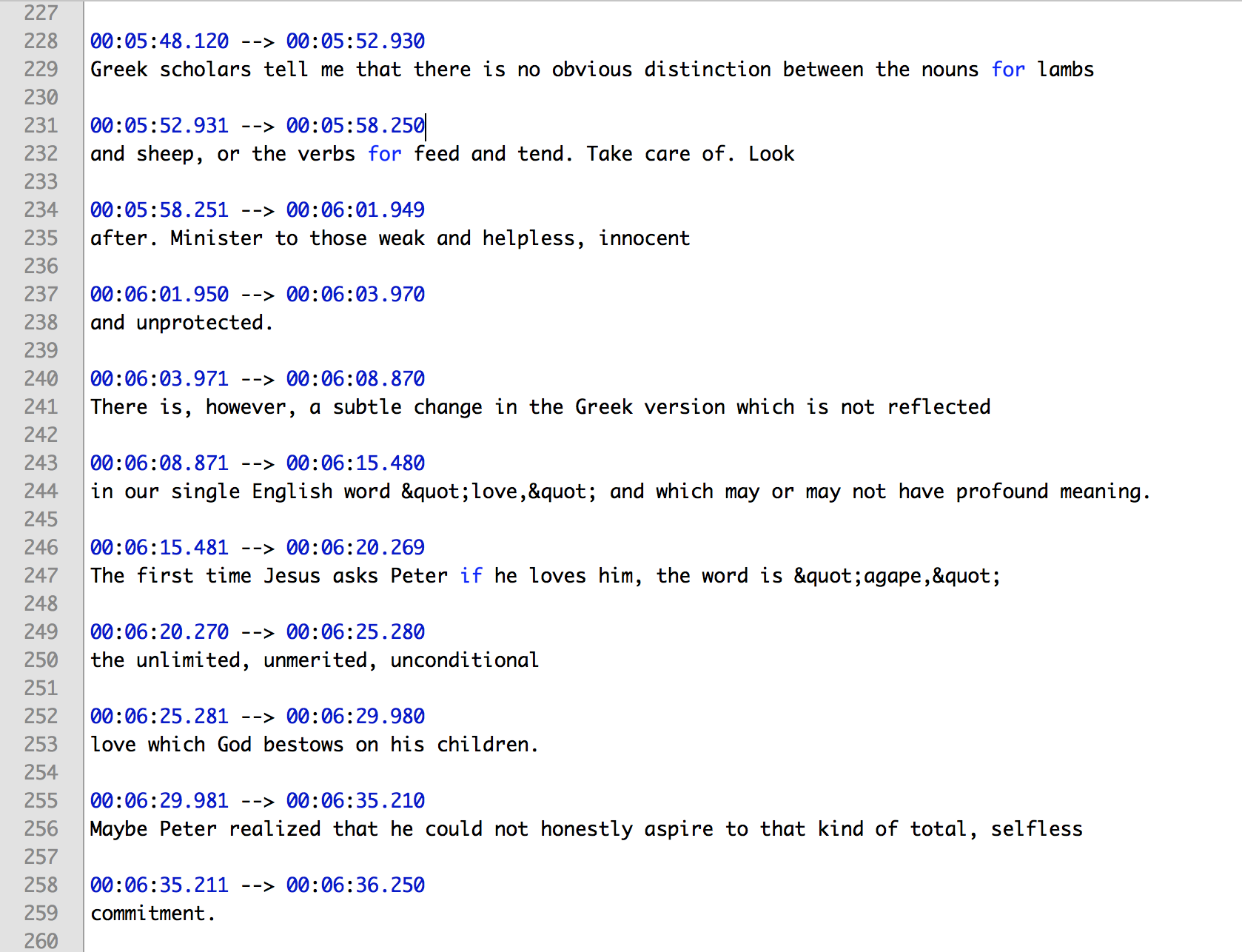

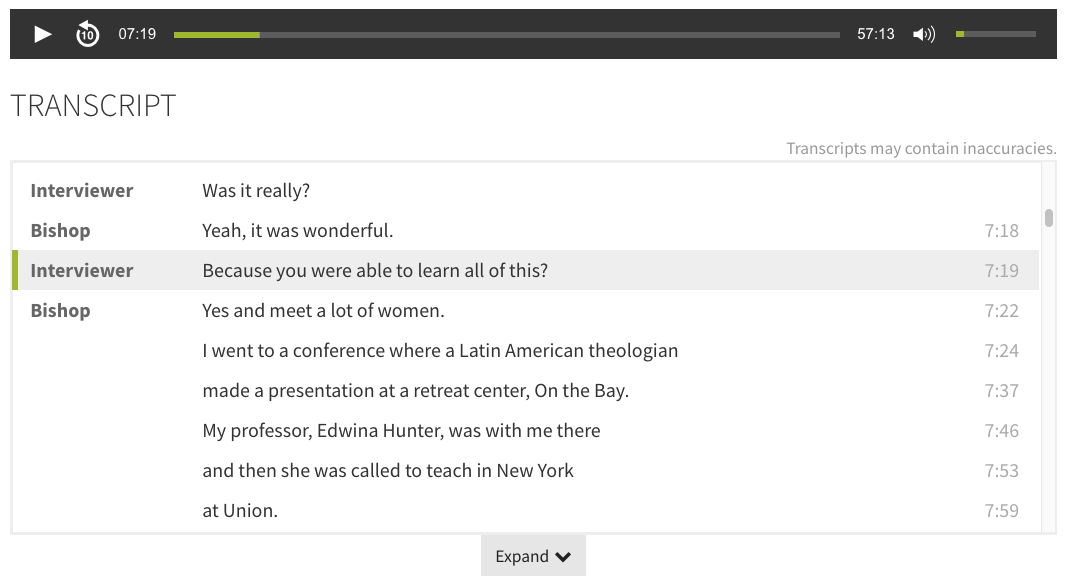

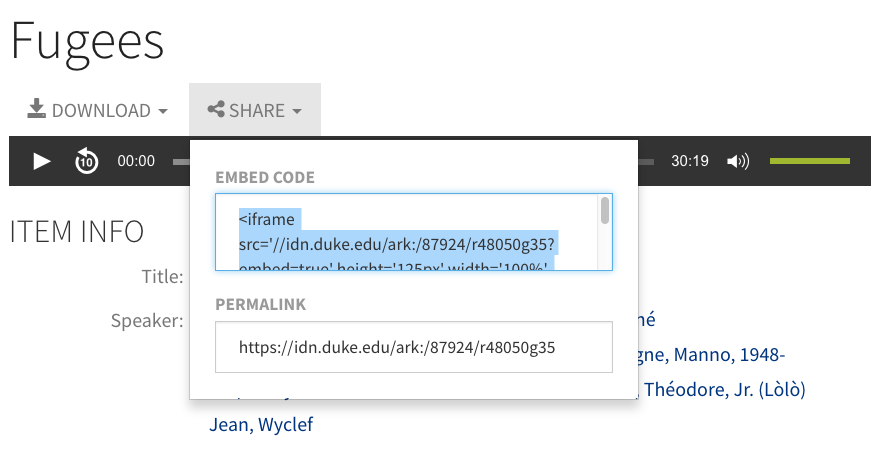

A/V Embedding

The DDR web interface provides an optimal viewing or listening experience for AV, but we also want to make it easy to present objects from the DDR on other websites. When used on other sites, we’d like the objects to include some metadata, a link to the DDR page, and proper attribution. To that end, we now have copyable <iframe> embed code available from the Share menu for AV items.
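For a sense of what generating that copyable snippet might involve, here is a small sketch. The embed URL, the default dimensions, and the attribute set are placeholders rather than the DDR's actual values; the real code to copy is whatever the Share menu provides.

```ruby
require "cgi"

# Build an <iframe> snippet for a given embed URL.
# URL pattern, dimensions, and attributes are illustrative assumptions.
def embed_code(embed_url, title:, width: 640, height: 360)
  attrs = { "src" => embed_url, "title" => title, "width" => width, "height" => height }
  rendered = attrs.map { |name, value| %(#{name}="#{CGI.escapeHTML(value.to_s)}") }.join(" ")
  "<iframe #{rendered} allowfullscreen></iframe>"
end

puts embed_code("https://repository.example.edu/embed/abc123", title: "Sample recording")
```

Escaping each attribute value keeps the snippet valid HTML even when a title or URL contains quotes or ampersands.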

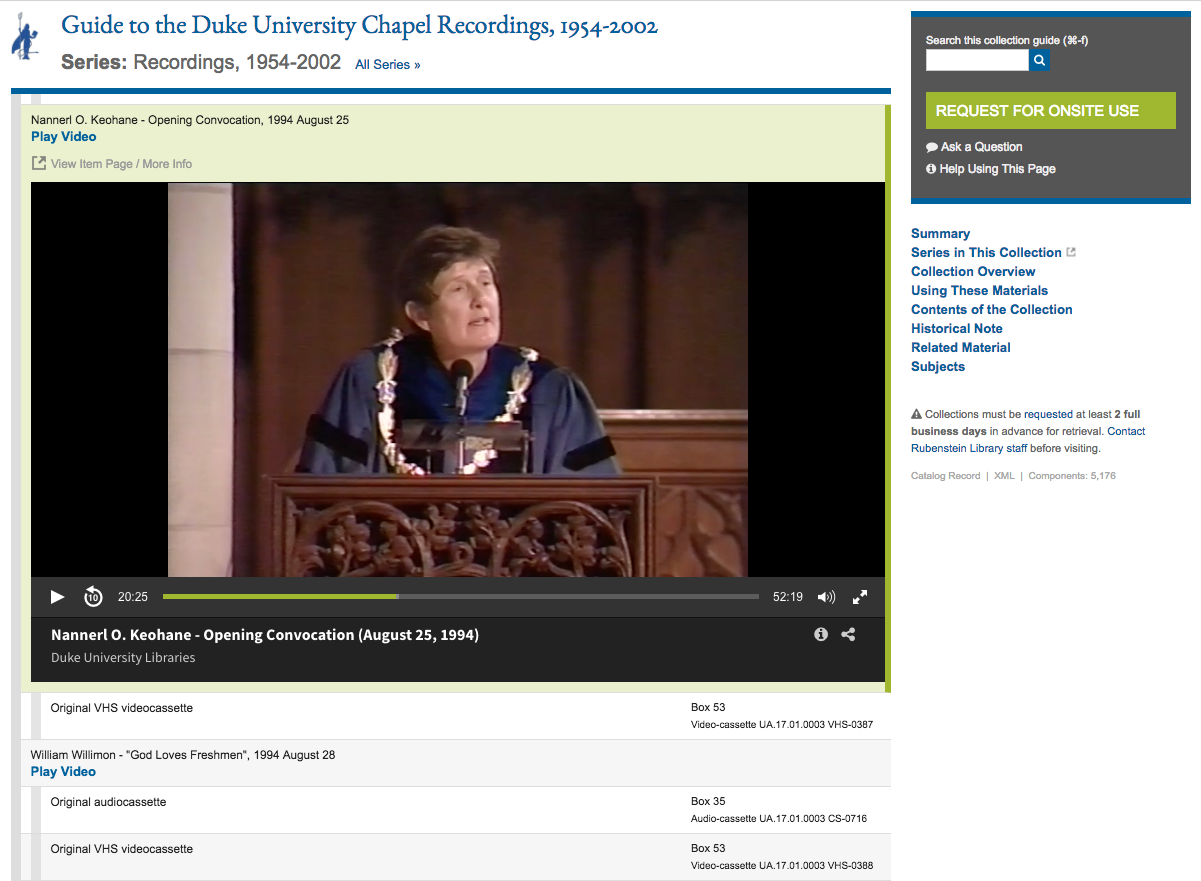

This embed code is also what we now use within the Rubenstein Library collection guides (finding aids) interface: it lets us present digital objects from the DDR directly from within a corresponding collection guide. So as a researcher browses the inventory of a physical archival collection, they can play the media inline without having to leave.

Sites@Duke Integration

If your website or blog is one of the thousands of WordPress sites hosted and supported by Sites@Duke, a service of Duke’s Office of Information Technology (OIT), we have good news for you. You can now embed objects from the DDR using a WordPress shortcode. Sites@Duke, like many content management systems, doesn’t allow authors to enter <iframe> tags, so a shortcode is the only way to get embeddable media to render.

And More!

Here are the other AV-related features we have been able to develop in 2017:

- Access control: master files & derivatives alike can be protected so access is limited to only authorized users/groups

- Video thumbnail images: model, manage, and display

- Video poster frames: model, manage, and display

- Intermediate/mezzanine files: model and manage

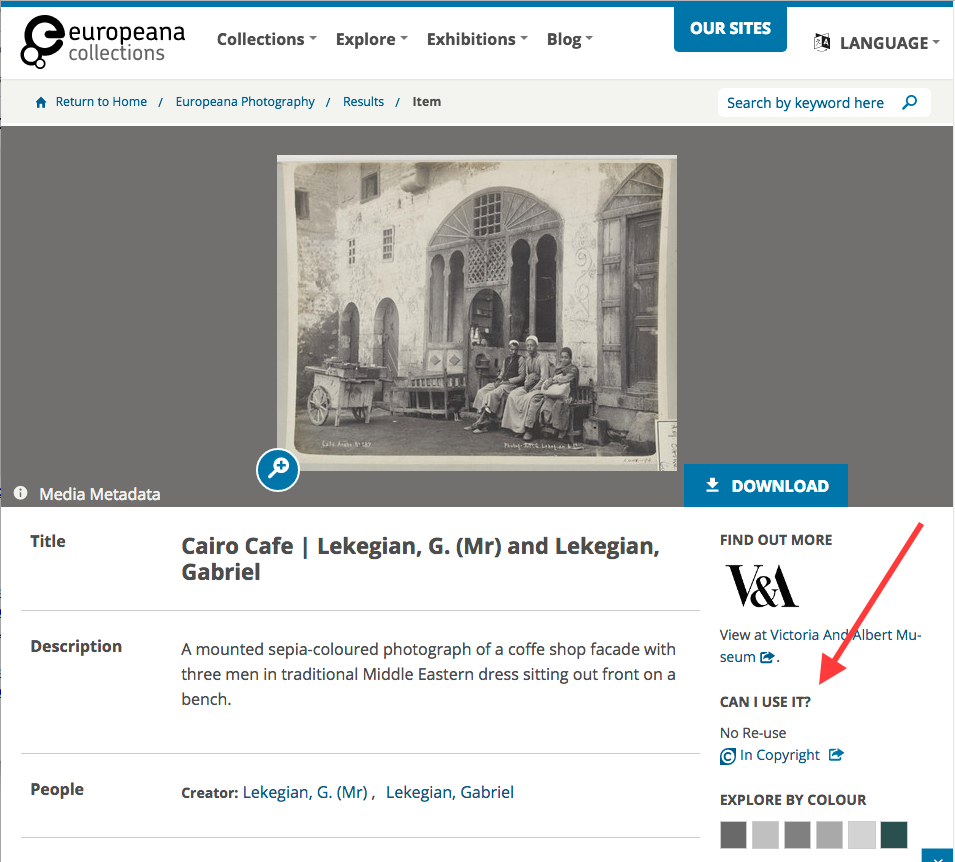

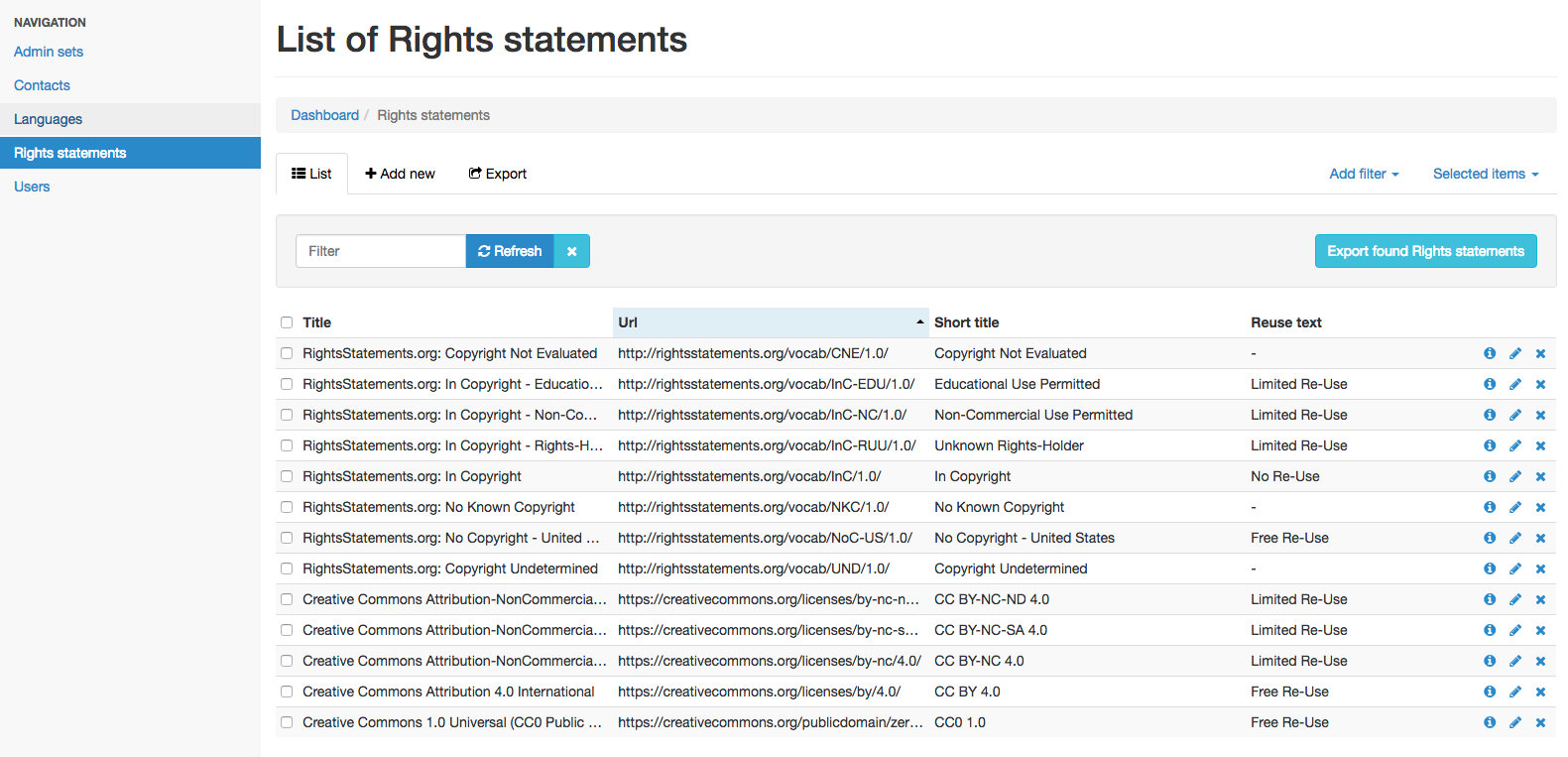

- Rights display: display icons and info from RightsStatements.org and Creative Commons, so it’s clear what users are permitted to do with media.

What’s Next

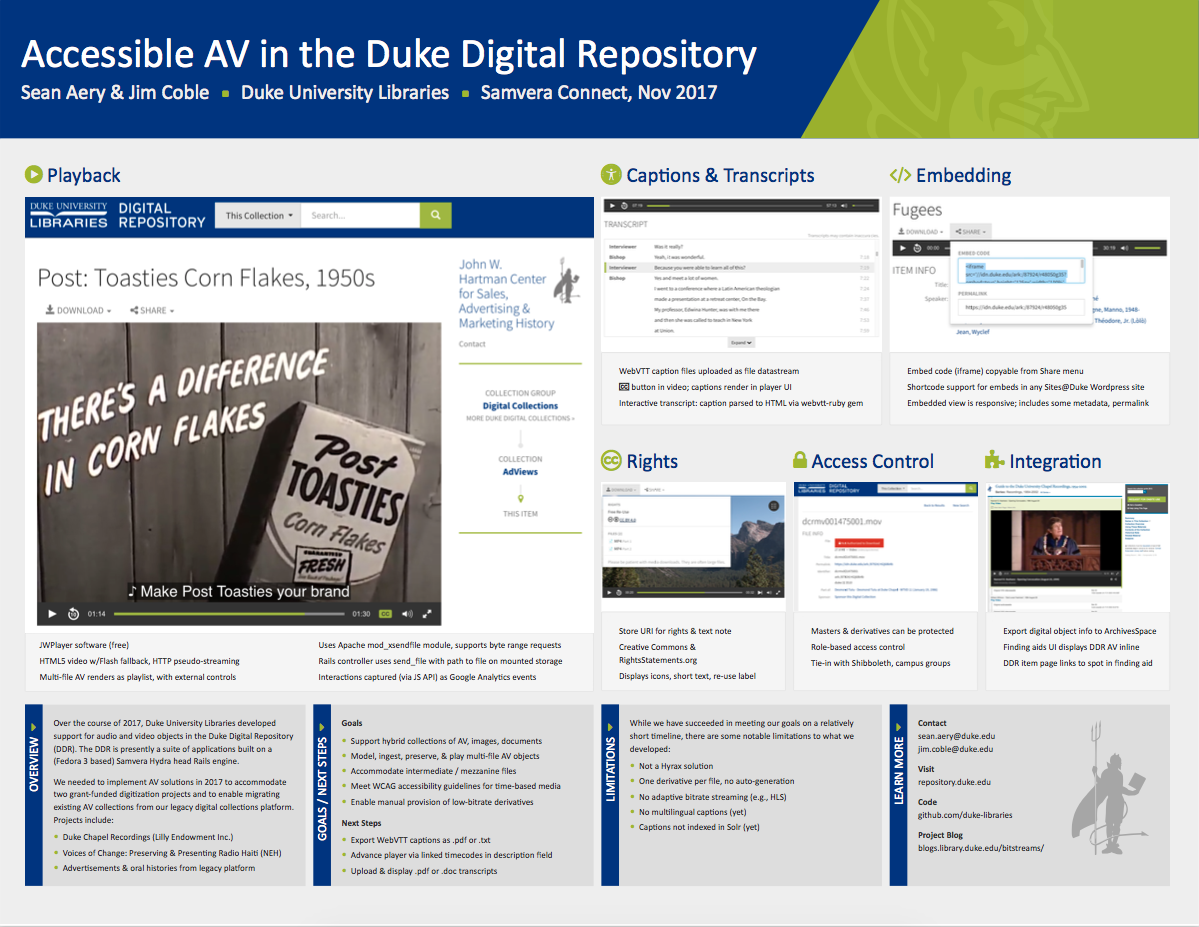

We look forward to sharing our recent AV development with our peers at the upcoming Samvera Connect conference (Nov 6-9, 2017 in Evanston, IL). Here’s our poster summarizing the work to date:

Looking ahead to the next couple of months, we aim to round out the year by completing a few more AV-related features, most notably:

- Export WebVTT captions as PDF or .txt

- Advance the player via linked timecodes in the description field in an item’s metadata

- Improve workflows for uploading caption files and transcript documents
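The linked-timecode item is still planned, so the following is only one possible shape for it, not a description of how it will be built. The sketch finds `mm:ss` or `h:mm:ss` timecodes in a description and wraps each in a link carrying the offset in seconds; the `data-seek` attribute and method names are hypothetical, and the description is assumed to be already-sanitized text.

```ruby
# Matches timecodes such as 12:30 or 1:02:03.
TIMECODE = /\b(?:(\d{1,2}):)?(\d{1,2}):(\d{2})\b/

# Convert captured hour/minute/second strings to a total in seconds.
# A missing hours capture (nil) counts as zero.
def timecode_seconds(hours, minutes, seconds)
  hours.to_i * 3600 + minutes.to_i * 60 + seconds.to_i
end

# Wrap each timecode in a link whose data-seek attribute (hypothetical)
# holds the offset a page script could pass to the player.
def link_timecodes(description)
  description.gsub(TIMECODE) do |stamp|
    offset = timecode_seconds($1, $2, $3)
    %(<a href="#" data-seek="#{offset}">#{stamp}</a>)
  end
end

puts link_timecodes("Sermon begins at 12:30; anthem at 1:02:03.")
```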

Now that these features are in place, we’ll be sharing a bunch of great new AV collections soon!