In previous posts I have referred to the FADGI standard for still image capture when describing still image creation in the Digital Production Center in support of our Digital Collections Program. We follow this standard in order to create archival files for preservation, long-term retention and access to our materials online. These guidelines help us create digital content in a consistent, scalable and efficient way. The most commonly cited part of the standard is the PPI guidelines for capturing various types of material. These guidelines are a collection of charts that list material types, physical dimensions and recommended capture specifications. The charts are very useful and relatively easy to read and understand. But the standard includes 93 “exciting” pages on all things still image capture, including file specifications, color encoding, data storage, physical environment, backup strategies, metadata and workflows. Below I will boil down the first 50 or so pages.

The FADGI standard was built on the NARA Technical Guidelines for Digitizing Archival Materials for Electronic Access: Creation of Production Master Files – Raster Images, which was established in 2004. The FADGI standard for still image capture is meant to be a set of best practices for cultural heritage institutions. It has recently been updated to include new advances in the field of still image capture and uses more approachable language than its predecessor.

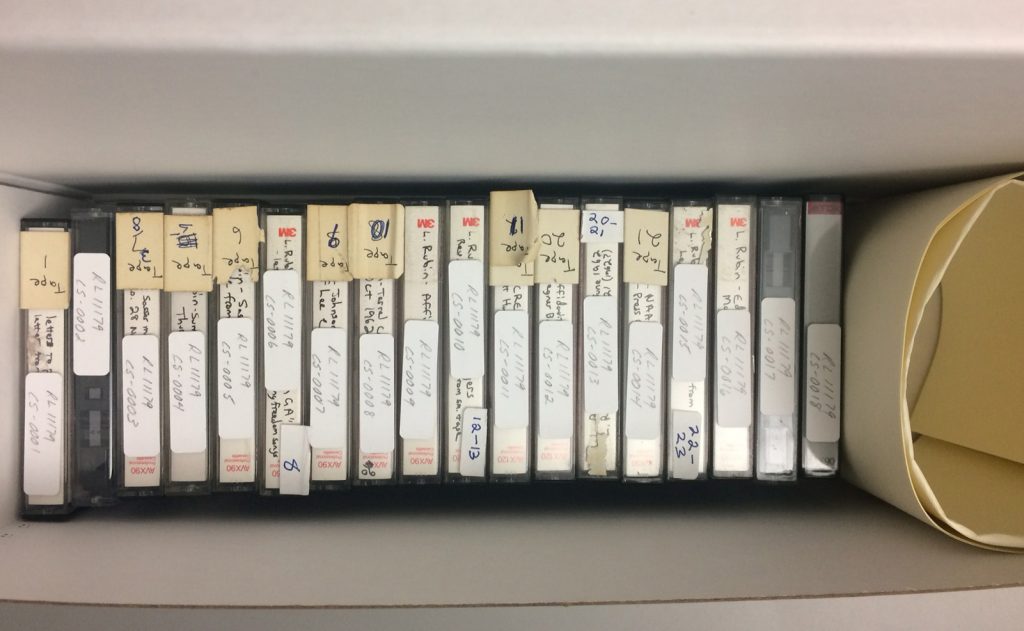

Full disclosure. Perkins Library and our digitization program didn’t start with any part of these guidelines in place. In fact, these guidelines didn’t exist at the time of our first attempt at in-house digitization in 1993. We didn’t even have an official digitization lab until early 2005. We started with one Epson flatbed scanner and one high-end CRT monitor. As our Digital Collections Program has matured, we have been able to add equipment and implement more of the standard, starting with scanner and monitor calibration and benchmark testing of capture equipment before purchase. We then established more consistent workflows and technical metadata capture, and developed a more robust file naming scheme along with file movement and data storage strategies. We now work hard to synchronize our efforts between all of the departments involved in our Digital Collections Program. We are always refining our workflows and processes to become more efficient at publishing and preserving Digital Collections.

Dive Deep. For those of you who would like to take a deep dive into image capture for cultural heritage institutions, here is the full standard. For those of you who don’t fall into this category, I’ve boiled down the standard below. I believe that it’s necessary to use the whole standard in order for a program to become stable and mature. It can be implemented over time, as we did.

Boil It Down. The FADGI standard provides a tiered approach for still image capture, from 1 to 4 stars, with 4 stars being the highest. The 1 and 2 star tiers are used when imaging for access, and tiers 3 and 4 are used for archival imaging with preservation as the focus.

The physical environment: The environment should be color neutral. Walls should be painted a neutral gray to minimize color shifts and flare that might come from a wall color that is not neutral. Monitors should be positioned to avoid glare on the screens (this is why most professional monitors have hoods). Overhead lighting should be around 5000K (tungsten, fluorescent and other bulbs can have yellow, magenta and green color shifts, which can affect the perception of the color of an image). Each capture device should be separated so that light spillover doesn’t affect another capture device.

Monitors, light boxes and viewing of originals: Overhead light or a viewing booth should be set up for viewing originals and should be a neutral 5000K. A light box used for viewing transmissive material should also be 5000K.

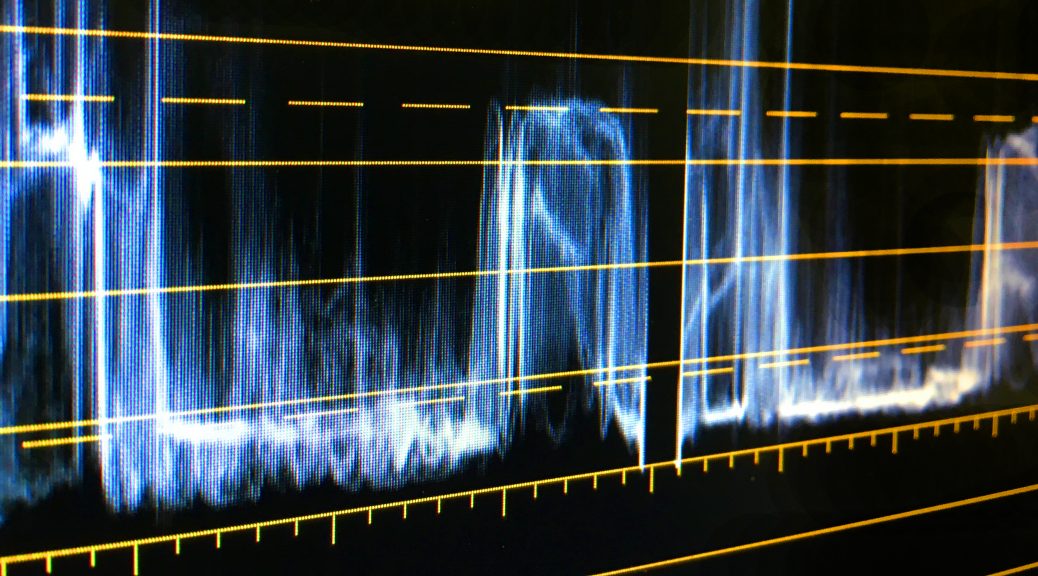

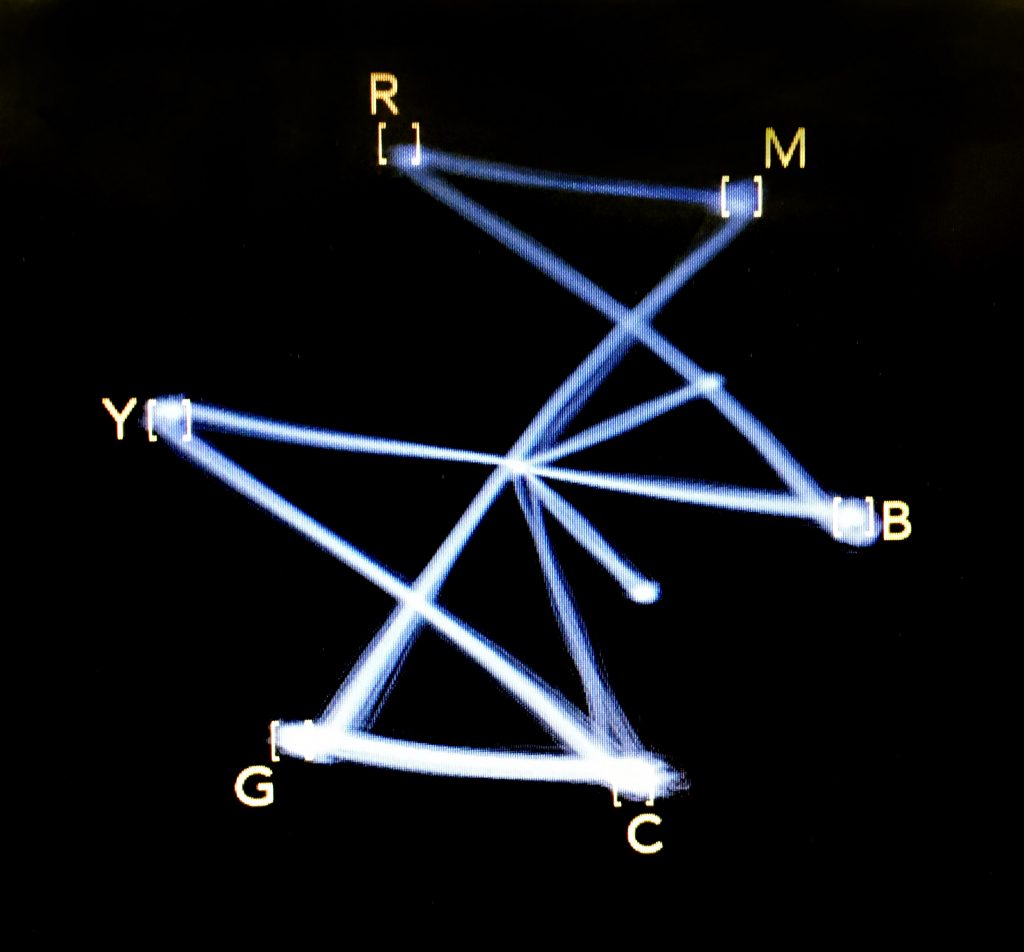

Digital images should be viewed in the colorspace they were captured in and the monitor should be able to display that colorspace. Most monitors display in the sRGB colorspace. However, professional monitors use the AdobeRGB colorspace which is commonly used in cultural heritage image capture. The color temperature of your monitor should be set to the Kelvin temperature that most closely matches the viewing environment. If the overhead lights are 5000K, then the monitor’s color temperature should also be set to 5000K.
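As a small illustration of keeping every light source in agreement, the sketch below flags any source whose color temperature is far from the 5000K target. The function name, the sample readings and the 200 K tolerance are all hypothetical placeholders chosen for this example; none of them come from the standard.

```python
TARGET_KELVIN = 5000  # neutral viewing target described above


def mismatched_sources(readings, target=TARGET_KELVIN, tolerance=200):
    """Return the light sources whose color temperature is off target.

    readings maps a source name to its measured Kelvin value. The
    200 K tolerance is an arbitrary placeholder, not a FADGI figure.
    """
    return {name: k for name, k in readings.items() if abs(k - target) > tolerance}


# Hypothetical readings for one room.
room = {
    "overhead lights": 5000,
    "viewing booth": 5050,
    "light box": 5000,
    "monitor white point": 6500,  # a common default setting
}
print(mismatched_sources(room))  # {'monitor white point': 6500}
```

In this made-up room, only the monitor would need to be recalibrated to match the rest of the viewing environment.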

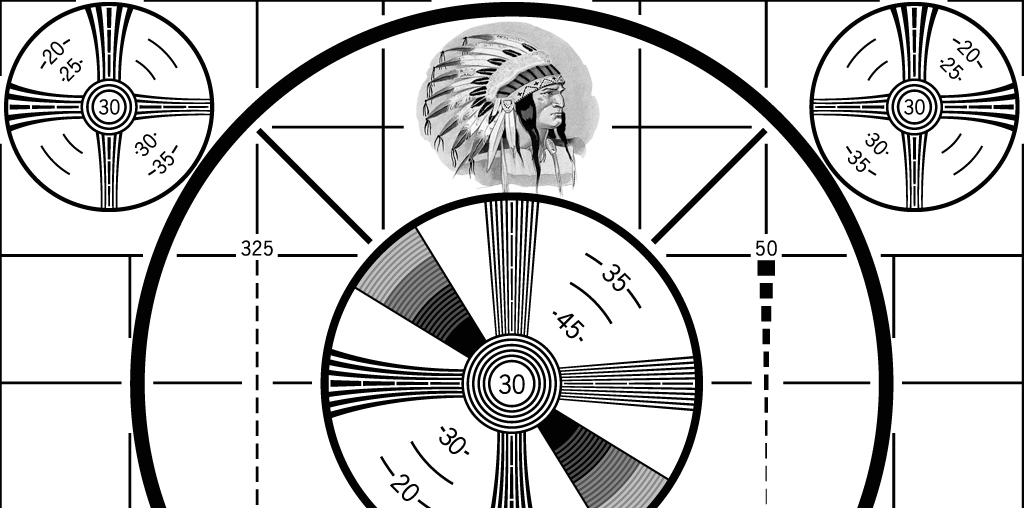

Calibration packages, which consist of hardware and software that read and evaluate color, are an essential piece of equipment. These packages normalize the luminosity, color temperature and color balance of a monitor and create an ICC display profile that the computer’s operating system uses to display colors correctly, so that accurate color assessments can be made.

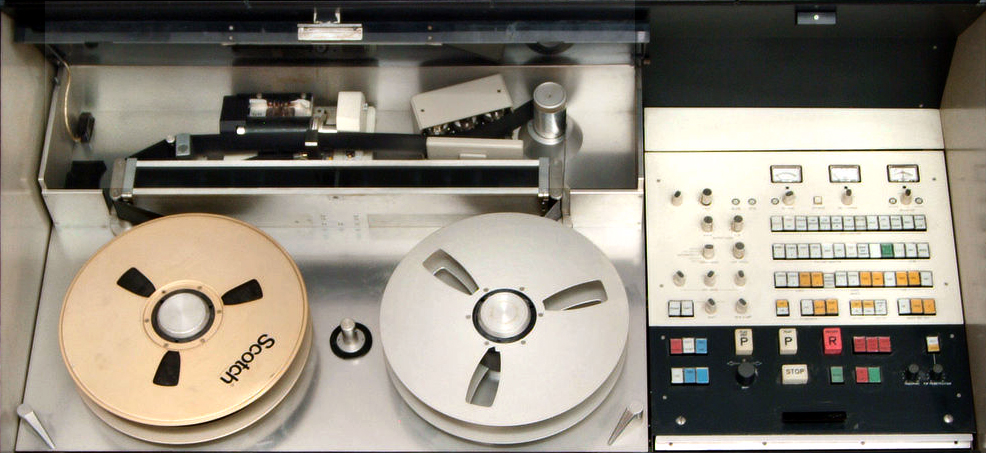

Capture Devices: The market is flooded with capture devices of varying quality. It is important to do research on any new capture device. I recommend skipping the marketing that touts all the bells and whistles and talking instead to institutions that have established digital collections programs. This will help to focus research on the few contenders that will produce the files that you need. They can help you slog through how many megapixels are necessary, which lenses are best for which application, and which scanner driver is easiest to use while still getting the best color out of your scanner. Beyond the capture device itself, other things that come into play are scanner drivers that produce the most accurate and consistent results, upgrade paths for your equipment and service packages that help maintain your equipment.

Capture Specifications: I’ll keep this part short because there is a wide variety of charts covering many formats, capture specifications and their corresponding tiers. Below I have simplified the information from the charts. These specifications hover between tiers 3 and 4, mostly leaning toward 4.

Always use a FADGI-compliant reference target at the beginning of a session to ensure the capture device is within acceptable deviation. The target values differ depending on which reference target is used. Most targets come with a chart listing the numerical value of each swatch in the target. Our lab uses a classic GretagMacbeth target, and our acceptable color deviation is +/- 5 units of color.
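As a rough illustration of that start-of-session check, the sketch below compares measured patch values against a target's published values and reports any patch that drifts beyond the tolerance. The patch names and numbers are hypothetical placeholders, and reading "units of color" as per-channel 8-bit RGB differences is an assumption made for this example; it is not how the standard defines its metrics.

```python
# Hypothetical reference values for three patches (8-bit RGB).
# Real values come from the chart supplied with your target.
REFERENCE = {
    "white": (243, 243, 242),
    "neutral_5": (122, 122, 121),
    "black": (52, 52, 52),
}

TOLERANCE = 5  # acceptable deviation per channel (+/- 5)


def out_of_tolerance(measured, reference=REFERENCE, tolerance=TOLERANCE):
    """Return {patch: per-channel differences} for patches outside tolerance."""
    failures = {}
    for patch, ref_rgb in reference.items():
        diffs = tuple(m - r for m, r in zip(measured[patch], ref_rgb))
        if any(abs(d) > tolerance for d in diffs):
            failures[patch] = diffs
    return failures


# Hypothetical readings taken from a capture of the target.
measured = {
    "white": (240, 244, 241),
    "neutral_5": (130, 123, 120),  # red channel drifted by +8
    "black": (50, 53, 55),
}
print(out_of_tolerance(measured))  # {'neutral_5': (8, 1, -1)}
```

An empty result would mean the device is within tolerance for every patch checked; anything else is a cue to recalibrate before starting the session.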

Our general technical specs for reflective material including books, documents, photographs and maps are:

- Master File Format: TIFF

- Resolution: 300 ppi

- Bit Depth: 8

- Color Depth: 24 bit RGB

- Color Space: Adobe RGB (1998)

These specifications generally follow the standard. If the materials being scanned are smaller than 5×7 inches, we increase the PPI to 400 or 600 depending on the font size and dimensions of the object.
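The resolution rule above can be sketched in a few lines. This is only an illustration: the function names are made up, "smaller than 5×7" is read as "short side under 5 inches and long side under 7 inches", and the `fine_detail` flag is a hypothetical stand-in for the operator's judgment about font size.

```python
def choose_ppi(width_in, height_in, fine_detail=False):
    """Pick a capture resolution for reflective material.

    'Smaller than 5x7' is read here as: short side under 5 inches and
    long side under 7 inches (an assumption for this sketch).
    fine_detail is a hypothetical flag standing in for the operator's
    judgment about font size.
    """
    short, long_ = sorted((width_in, height_in))
    if short < 5 and long_ < 7:
        return 600 if fine_detail else 400
    return 300


def pixel_dimensions(width_in, height_in, ppi):
    """Pixel width and height of a capture at the given resolution."""
    return round(width_in * ppi), round(height_in * ppi)


ppi = choose_ppi(8.5, 11)
print(ppi, pixel_dimensions(8.5, 11, ppi))  # 300 (2550, 3300)

ppi = choose_ppi(3.5, 5, fine_detail=True)
print(ppi, pixel_dimensions(3.5, 5, ppi))   # 600 (2100, 3000)
```

Raising the PPI for small originals keeps the pixel dimensions of the master file in a useful range: the small card at 600 ppi ends up with nearly as many pixels as the letter-size page at 300 ppi.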

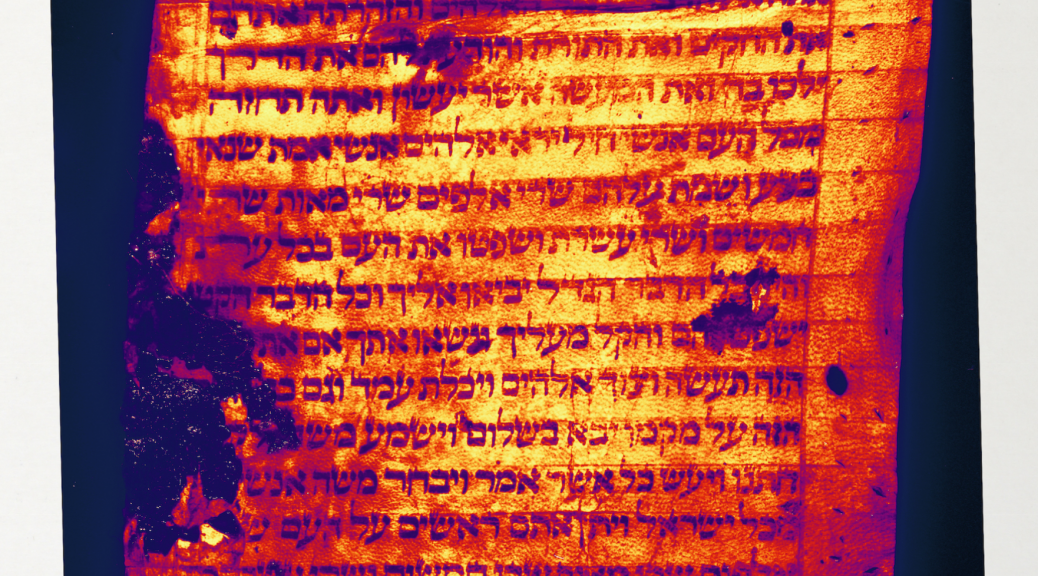

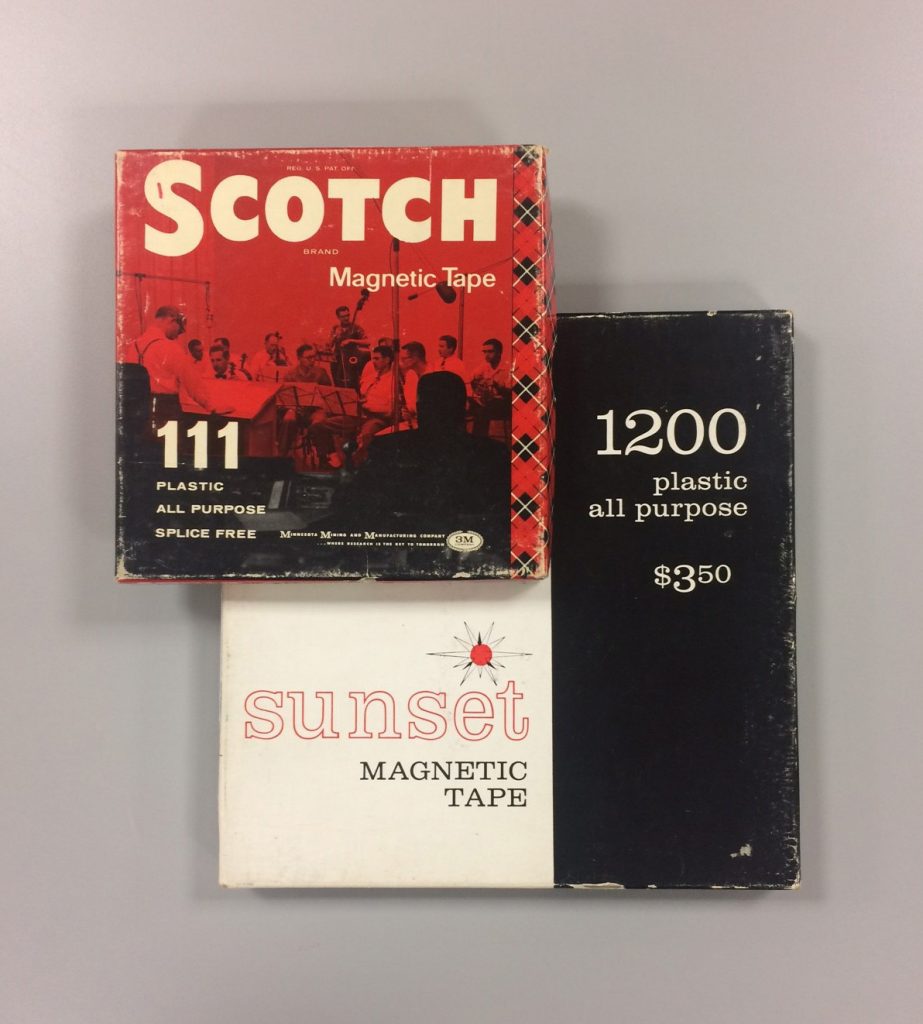

Our general technical specs for transmissive material including acetate, nitrate and glass plate negatives, slides and other positive transmissive material are:

- Master File Format: TIFF

- Resolution: 3000 – 4000 ppi

- Bit Depth: 16

- Color Depth: 48 bit RGB

- Color Space: Adobe RGB (1998)

These specifications generally follow the standard. If the transmissive materials being scanned are larger than 4×5 inches, we decrease the PPI to 1500 or 2000 depending on negative size and condition.
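To see why resolution comes down as the original gets larger, it helps to estimate the size of the pixel data. The sketch below is a back-of-the-envelope calculation, assuming three RGB channels stored at the listed bit depth and ignoring TIFF headers, metadata and any compression; the function name is made up for this example.

```python
def uncompressed_bytes(width_in, height_in, ppi, bits_per_channel, channels=3):
    """Approximate size of the raw pixel data, ignoring TIFF headers and metadata."""
    width_px = round(width_in * ppi)
    height_px = round(height_in * ppi)
    return width_px * height_px * channels * bits_per_channel // 8


# Letter-size document, 300 ppi, 8 bits per channel
print(uncompressed_bytes(8.5, 11, 300, 8))   # 25245000 (about 25 MB)

# 4x5 inch negative, 3000 ppi, 16 bits per channel
print(uncompressed_bytes(4, 5, 3000, 16))    # 1080000000 (about 1.08 GB)

# 8x10 inch negative, 1500 ppi, 16 bits per channel
print(uncompressed_bytes(8, 10, 1500, 16))   # 1080000000
```

Doubling both dimensions of the original while halving the PPI leaves the pixel count unchanged, so the 8×10 negative at 1500 ppi yields the same amount of data as the 4×5 at 3000 ppi.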

Recommended capture devices: The standard goes into detail on which capture devices to use, and which not to use, when digitizing different types of material. It describes when to use manually operated planetary scanners as opposed to a digital scan back, when to use a digital scan back instead of a flatbed scanner, and when and when not to use a sheet-fed scanner. Not every device can capture every type of material. In our lab we have six different devices to capture a wide variety of material in different states of fragility. We work with our Conservation Department when deciding which capture device to use.

General Guidelines for still image capture

- Do not apply pressure with a glass platen or otherwise unless approved by a paper conservator.

- Do not use vacuum boards or high UV light sources unless approved by a paper conservator.

- Do not use auto page turning devices unless approved by a paper conservator.

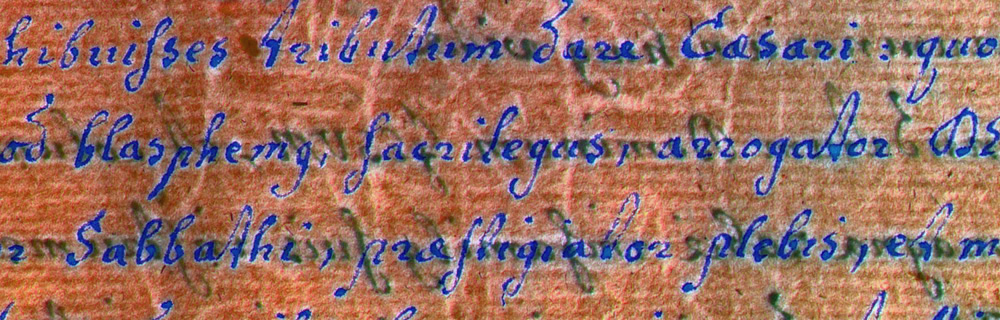

- For master files, pages, documents and photographs should be imaged to include the entire area of the page, document or photograph.

- For bound items the digital image should capture as far into the gutter as practical but must include all of the content that is visible to the eye.

- If a backing sheet is used on a translucent piece of paper to increase contrast and readability, it must extend beyond the edge of the page to the end of the image on all open sides of the page.

- For master files, documents should be imaged to include the entire area and a small amount beyond to define the area.

- Do not use lighting systems that raise the surface temperature of the original more than 6 degrees F (3 degrees C) in the total imaging process.

- When capturing oversized material, if the sections of a multiple scan item are compiled into a single image, the separate images should be retained for archival and printing purposes.

- The use of glass or other materials to hold photographic images flat during capture is allowed, but only when the original will not be harmed by doing so. Care must be taken to ensure that flattening a photograph will not result in emulsion cracking or damage to the base material. Tightly curled materials must not be forced to lie flat.

- For original color transparencies, the tonal scale and color balance of the digital image should match the original transparency being scanned to provide accurate representation of the image.

- When scanning negatives, for master files the tonal orientation may be inverted to produce a positive. The resulting image will need to be adjusted to produce a visually pleasing representation. Digitizing negatives is closely analogous to printing negatives in a darkroom, and it depends heavily on the photographer’s or technician’s skill and visual literacy to produce a good image. There are few objective metrics for evaluating the overall representation of digital images produced from negatives.

- The lack of dynamic range in a film scanning system will result in poor highlight and shadow detail and poor color reproduction.

- No image retouching is permitted to master files.

These details were pulled directly from the standard. They cover a lot of ground but there are always decisions to be made that are uniquely related to the material to be digitized. There are 50 or so more pages of this standard related to workflow, color management, data storage, file naming and technical metadata. I’ll have to cover that in my next blog post.

The FADGI standard for still image capture is very thorough but also leaves room to adapt. While we don’t follow everything outlined in the standard, we do follow the majority of it. This standard, years of experience and a lot of trial and error have helped make our program more sound, consistent and scalable.